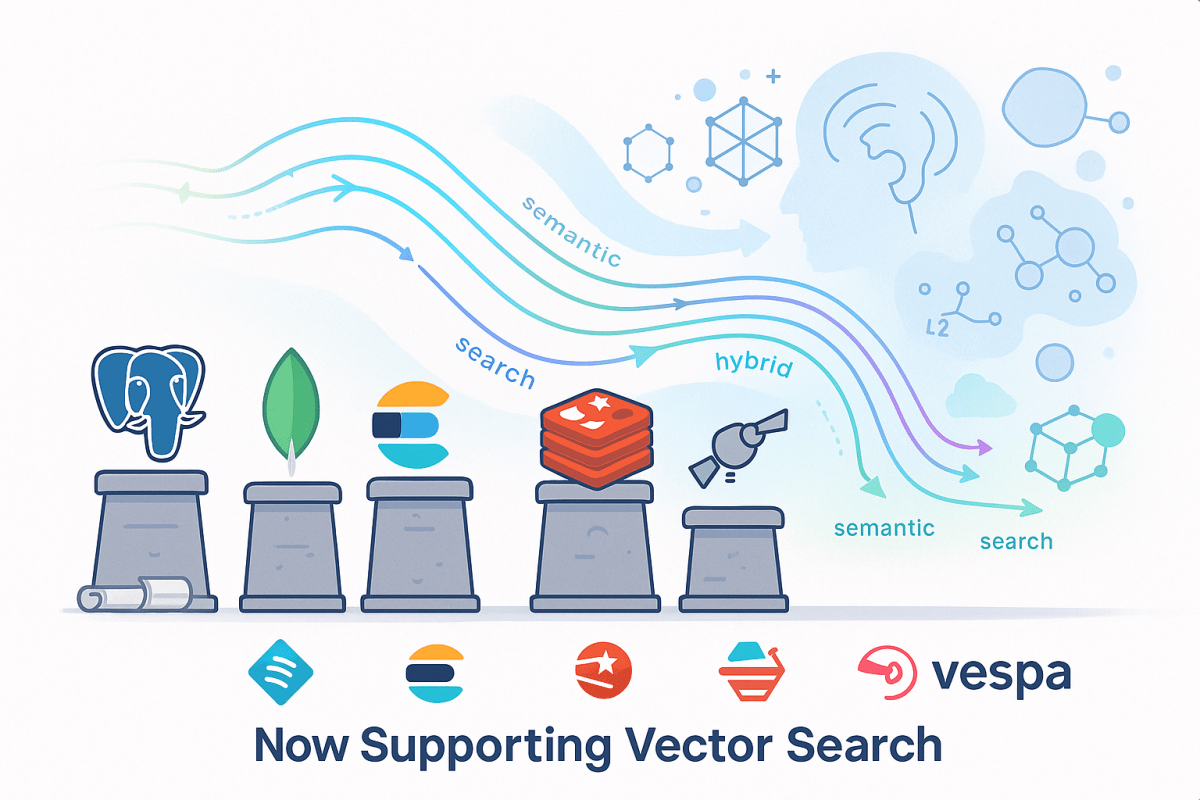

As artificial intelligence and large language models redefine how we work with data, a new class of database capabilities is gaining traction: vector search. In our previous post, we explored specialized vector databases like Pinecone, Weaviate, Qdrant, and Milvus — purpose-built to handle high-speed, large-scale similarity search. But what about teams already committed to traditional databases?

The truth is, you don’t have to rebuild your stack to start benefiting from vector capabilities. Many mainstream database vendors have introduced support for vectors, offering ways to integrate semantic search, hybrid retrieval, and AI-powered features directly into your existing data ecosystem.

This post is your guide to understanding how traditional databases are evolving to meet the needs of semantic search — and how they stack up against their vector-native counterparts.

Why Traditional Databases Matter in the Vector Era

Specialized tools may offer state-of-the-art performance, but traditional databases bring something equally valuable: maturity, integration, and trust. For organizations with existing investments in PostgreSQL, MongoDB, Elasticsearch, Redis, or Vespa, the ability to add vector capabilities without replatforming is a major win.

These systems enable hybrid queries, mixing structured filters and semantic search, and are often easier to secure, audit, and scale within corporate environments.
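
To make the idea of a hybrid query concrete, here is a minimal, database-agnostic sketch in Python. It applies a structured filter first and then ranks the remaining rows by cosine similarity to a query embedding. The `Doc` record, its `category` field, and the toy three-dimensional vectors are hypothetical stand-ins. Real systems use an index rather than a full scan like this one.

```python
import math
from dataclasses import dataclass


@dataclass
class Doc:
    # Hypothetical record: an id, one structured attribute, and an embedding.
    doc_id: str
    category: str
    embedding: list[float]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as unrelated
    return dot / (norm_a * norm_b)


def hybrid_search(docs: list[Doc], query: list[float], category: str, k: int) -> list[str]:
    """Structured filter first, then exact (brute-force) semantic ranking."""
    candidates = [d for d in docs if d.category == category]
    ranked = sorted(candidates, key=lambda d: cosine_similarity(d.embedding, query), reverse=True)
    return [d.doc_id for d in ranked[:k]]


docs = [
    Doc("a", "shoes", [1.0, 0.0, 0.0]),
    Doc("b", "shoes", [0.7, 0.7, 0.0]),
    Doc("c", "hats", [1.0, 0.1, 0.0]),
    Doc("d", "shoes", [0.0, 0.0, 1.0]),
]
print(hybrid_search(docs, query=[1.0, 0.2, 0.0], category="shoes", k=2))  # ['a', 'b']
```

Document `c` is the closest vector overall, but the filter excludes it. That is the behavior a `WHERE` clause or metadata filter adds to a vector query.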

Let’s look at each of them in detail — not just the features, but how they feel to work with, where they shine, and what you need to watch out for.

🐘 PostgreSQL + pgvector

The pgvector extension brings vector types and similarity search directly into PostgreSQL. It’s often the fastest path to experimenting with semantic search in SQL-native environments.

Key features:

- Vector columns with up to 16,000 dimensions (HNSW and IVFFlat indexes support up to 2,000)
- Cosine, L2, and dot product similarity
- IVFFlat and HNSW approximate indexing, both built into the extension
- SQL joins and hybrid queries supported

Typical use cases:

- AI-enhanced dashboards and BI
- Internal RAG pipelines
- Private deployments in sensitive industries
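
pgvector exposes these similarity measures as distance operators. `<->` is Euclidean (L2) distance, `<#>` is the negative inner product, and `<=>` is cosine distance. The plain-Python sketch below reproduces what each operator returns, which explains why a smaller value always means "closer" in an `ORDER BY ... ASC` query. The function names are illustrative only and are not part of pgvector.

```python
import math


def l2_distance(a: list[float], b: list[float]) -> float:
    # Mirrors pgvector's `<->`: Euclidean distance.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def negative_inner_product(a: list[float], b: list[float]) -> float:
    # Mirrors pgvector's `<#>`: the inner product, negated so that
    # ascending order puts the most similar vectors first.
    return -sum(x * y for x, y in zip(a, b))


def cosine_distance(a: list[float], b: list[float]) -> float:
    # Mirrors pgvector's `<=>`: 1 minus cosine similarity.
    dot = sum(x * y for x, y in zip(a, b))
    return 1 - dot / (math.hypot(*a) * math.hypot(*b))


q = [3.0, 1.0, 2.0]
v = [1.0, 2.0, 3.0]
print(l2_distance(q, v))             # ≈ 2.449 (sqrt(6))
print(negative_inner_product(q, v))  # -11.0
print(cosine_distance(q, v))         # ≈ 0.214 (3/14)
```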

Great for small-to-medium workloads. With indexing, it’s usable for production — but not tuned for web-scale.
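
A minimal sketch of that production setup follows, written as the SQL statements a Python application would send through a driver such as psycopg. The `documents` table, its `category` column, the 3-dimensional `vector(3)` size, and the `to_pgvector_literal` helper are hypothetical. The `vector` type, the `hnsw` index method, the `vector_cosine_ops` operator class, and the `<=>` operator come from pgvector itself.

```python
# Hypothetical schema: a documents table with one structured column
# and one 3-dimensional embedding (real models use hundreds of dimensions).
SETUP_SQL = """
CREATE EXTENSION IF NOT EXISTS vector;

CREATE TABLE IF NOT EXISTS documents (
    id        bigserial PRIMARY KEY,
    category  text NOT NULL,
    body      text NOT NULL,
    embedding vector(3) NOT NULL
);

-- Approximate index for cosine distance; pgvector also offers ivfflat.
CREATE INDEX IF NOT EXISTS documents_embedding_idx
    ON documents USING hnsw (embedding vector_cosine_ops);
"""

# Hybrid query: an ordinary SQL filter plus ordering by cosine distance (<=>).
# The %s placeholders follow psycopg's parameter style.
HYBRID_QUERY = """
SELECT id, body, embedding <=> %s::vector AS distance
FROM documents
WHERE category = %s
ORDER BY embedding <=> %s::vector
LIMIT %s;
"""


def to_pgvector_literal(values: list[float]) -> str:
    """Format a Python list as pgvector's text input, e.g. '[0.1,0.2,0.3]'."""
    return "[" + ",".join(repr(float(v)) for v in values) + "]"


query_vec = to_pgvector_literal([0.1, 0.2, 0.3])
params = (query_vec, "support", query_vec, 5)
print(query_vec)  # [0.1,0.2,0.3]
```

With psycopg, something like `cur.execute(HYBRID_QUERY, params)` would run the query. The `pgvector` Python package also provides type registration as an alternative to formatting literals by hand. One caveat: with an approximate index, pgvector applies the `WHERE` filter after the index scan, so a very selective filter can return fewer rows than `LIMIT`. Recent pgvector releases add iterative index scans to mitigate this.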

| Advantages | Weaknesses |
|---|---|
| Familiar SQL workflow | Slower than vector-native DBs |
| Secure and compliance-ready | Indexing options are limited |
| Combines relational + semantic data | Requires manual tuning |
| Open source and widely supported | Not ideal for streaming data |

Thoughts: If you already run PostgreSQL, pgvector is a no-regret move. Just don’t expect deep vector tuning or billion-record speed.

🍃 MongoDB Atlas Vector Search

MongoDB’s Atlas platform offers native vector search, integrated into its powerful document model and managed cloud experience.

Key features:

- Vector fields with HNSW indexing
- Filtered search over metadata
- Built into the Atlas Search framework

Typical use cases:

- Personalized content and dashboards
- Semantic product or helpdesk search
- Lightweight assistant memory

Well suited for mid-sized applications. Performance may dip at large scale, but it works well in the managed environment.
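
In Atlas, vector search runs as the `$vectorSearch` aggregation stage against a vector search index. The sketch below builds both the index definition and the pipeline as Python dictionaries, in the shape PyMongo would send them. The `embedding`, `category`, and `title` field names, the index name `vector_index`, the 3-dimensional vectors, and the candidate oversampling factor are hypothetical. Fields used in `filter` must be declared with `"type": "filter"` in the index definition.

```python
# Atlas Vector Search index definition (created via the Atlas UI, CLI, or a driver).
index_definition = {
    "fields": [
        {"type": "vector", "path": "embedding", "numDimensions": 3, "similarity": "cosine"},
        # Metadata fields must be indexed as "filter" to be usable in $vectorSearch.filter.
        {"type": "filter", "path": "category"},
    ]
}


def build_pipeline(query_vector: list[float], category: str, k: int = 5) -> list[dict]:
    """Aggregation pipeline: filtered approximate search, then project the score."""
    return [
        {
            "$vectorSearch": {
                "index": "vector_index",      # hypothetical index name
                "path": "embedding",
                "queryVector": query_vector,
                "numCandidates": k * 20,      # HNSW candidates to examine (illustrative ratio)
                "limit": k,
                "filter": {"category": {"$eq": category}},
            }
        },
        {"$project": {"_id": 1, "title": 1, "score": {"$meta": "vectorSearchScore"}}},
    ]


pipeline = build_pipeline([0.1, 0.2, 0.3], category="helpdesk", k=5)
# With PyMongo, roughly: results = collection.aggregate(pipeline)
```

Raising `numCandidates` relative to `limit` generally trades latency for recall.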

| Advantages | Weaknesses |
|---|---|
| NoSQL-native and JSON-based | Only available in Atlas Cloud |
| Great for metadata + vector blending | Fewer configuration options |
| Easy to activate in managed console | No open-source equivalent |

Thoughts: Ideal for startups or product teams already using MongoDB. Not built for billion-record scale, but fast enough for many typical SaaS workloads.

🦾 Elasticsearch with kNN Search

Elasticsearch, the king of full-text search, now supports vector similarity natively through its `dense_vector` field type and kNN search. It’s a hybrid powerhouse when keyword relevance and embeddings combine.

Key features:

- Approximate nearest neighbor (ANN) search using HNSW
- Hybrid queries combining text and vectors
- Built-in scoring customization

Typical use cases:

- E-commerce recommendations
- Hybrid document search
- Knowledge base retrieval bots

Performs well at enterprise scale with the right tuning. Latency can be higher than with vector-native tools, but hybrid precision is hard to beat.
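
Here is a hedged sketch of hybrid search in Elasticsearch 8.x: a `dense_vector` mapping plus a search body that sends a keyword `query` and a `knn` clause together. When both are present, each hit's score is the sum of its keyword and vector scores, weighted by their `boost` values. The sketch uses Python dictionaries that mirror the REST request bodies. The field names (`title`, `title_vector`, `in_stock`), the boost values, and the 3-dimensional vectors are hypothetical.

```python
# Index mapping: a text field for BM25 and a dense_vector field indexed for kNN (HNSW).
mapping = {
    "mappings": {
        "properties": {
            "title": {"type": "text"},
            "in_stock": {"type": "boolean"},
            "title_vector": {
                "type": "dense_vector",
                "dims": 3,
                "index": True,
                "similarity": "cosine",
            },
        }
    }
}


def hybrid_search_body(text: str, vector: list[float], k: int = 10) -> dict:
    """Search body mixing a keyword query with approximate kNN."""
    return {
        "query": {
            "match": {"title": {"query": text, "boost": 0.3}}
        },
        "knn": {
            "field": "title_vector",
            "query_vector": vector,
            "k": k,
            "num_candidates": k * 10,
            # This filter applies to the kNN clause only, not to "query".
            "filter": {"term": {"in_stock": True}},
            "boost": 0.7,
        },
        "size": k,
    }


body = hybrid_search_body("trail running shoes", [0.1, 0.2, 0.3])
# With the official Python client (8.x), roughly:
#   es.search(index="products", query=body["query"], knn=body["knn"], size=body["size"])
```

Tuning the two boosts is the simplest way to shift results toward lexical or semantic relevance.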

| Advantages | Weaknesses |
|---|---|
| Text + vector search in one place | Primarily HNSW-based ANN indexing |
| Proven scalability and monitoring | Java heap tuning can be tricky |
| Custom scoring and filters | Not optimized for dense-only queries |

Thoughts: If you already use Elasticsearch, vector search is a logical next step. Not a pure vector engine, but extremely versatile.

🧰 Redis + RediSearch

Redis supports vector similarity through its RediSearch module. The main benefit? Speed. It’s hard to beat in real-time scenarios.

Key features:

- In-memory vector search
- Cosine, L2, dot product supported
- Real-time indexing and fast updates

Typical use cases:

- Chatbot memory and context
- Real-time personalization engines
- Short-lived embeddings and session logic

Incredible speed for small-to-medium datasets. Memory-bound unless used with Redis Enterprise or disk-backed variants.
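
Below is a minimal sketch of the Redis workflow, expressed as raw RediSearch commands built in Python. redis-py can send these with `execute_command`. The sketch creates an HNSW vector index over hashes and stores an embedding as packed float32 bytes with a TTL, which covers the short-lived embeddings case. It then runs a KNN query. The index name `idx:chat`, the `chat:` key prefix, the field names, and the 3-dimensional vectors are hypothetical.

```python
import struct

DIM = 3  # hypothetical; real embedding models use hundreds of dimensions


def to_float32_bytes(vec: list[float]) -> bytes:
    """Pack a vector as float32 bytes (little-endian, as on typical x86/ARM hosts)."""
    return struct.pack(f"<{len(vec)}f", *vec)


# FT.CREATE ... SCHEMA <field> VECTOR HNSW <number of attribute args> <attribute/value pairs>
create_index = [
    "FT.CREATE", "idx:chat", "ON", "HASH", "PREFIX", "1", "chat:",
    "SCHEMA",
    "text", "TEXT",
    "embedding", "VECTOR", "HNSW", "6",
    "TYPE", "FLOAT32", "DIM", str(DIM), "DISTANCE_METRIC", "COSINE",
]

# Store one message with its embedding, then let the key expire after an hour.
store = ["HSET", "chat:42", "text", "Where is my order?",
         "embedding", to_float32_bytes([0.1, 0.2, 0.3])]
expire = ["EXPIRE", "chat:42", "3600"]

# KNN query for the 3 nearest neighbours of $vec; the vector query syntax needs DIALECT 2+.
search = [
    "FT.SEARCH", "idx:chat", "*=>[KNN 3 @embedding $vec AS score]",
    "PARAMS", "2", "vec", to_float32_bytes([0.1, 0.2, 0.25]),
    "SORTBY", "score",
    "RETURN", "2", "text", "score",
    "DIALECT", "2",
]

# With redis-py, each list could be sent as: r.execute_command(*command)
```

Because the index tracks hashes by key prefix, expired keys drop out of search results without a separate cleanup job.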

| Advantages | Weaknesses |
|---|---|
| Real-time speed | Memory-constrained without upgrades |
| Ephemeral embedding support | Feature set is evolving |
| Simple to integrate and deploy | Not for batch semantic search |

Thoughts: Redis shines when milliseconds matter. For LLM tools and assistants, it’s often the right choice.

🛰 Vespa

Vespa is a full-scale engine built for enterprise search and recommendations. With native support for dense and sparse vectors, it’s a heavyweight in the semantic search space.

Key features:

- Dense/sparse hybrid support
- Advanced filtering and ranking
- Online learning and relevance tuning

Typical use cases:

- Media or news personalization
- Context-rich enterprise search
- Custom search engines with ranking logic

One of the most scalable traditional engines, capable of handling massive corpora and concurrent users with ease.
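
Here is a hedged sketch of Vespa's approach. Vectors are declared as tensor fields in the schema, and ranking lives in a rank profile. Queries use YQL's `nearestNeighbor` operator, which can be OR-ed with a text match for hybrid retrieval. The schema is kept as a string, and the query is built as the parameter set an HTTP client could send to Vespa's `/search/` endpoint. The `doc` schema, the `semantic` rank profile, the field names, the 3-dimensional tensor, and the `localhost:8080` endpoint are hypothetical.

```python
from urllib.parse import urlencode

# Hypothetical schema: a BM25-enabled text field plus an HNSW-indexed embedding.
SCHEMA = """
schema doc {
    document doc {
        field title type string {
            indexing: index | summary
            index: enable-bm25
        }
        field embedding type tensor<float>(x[3]) {
            indexing: attribute | index
            attribute {
                distance-metric: angular
            }
        }
    }
    rank-profile semantic {
        inputs {
            query(q) tensor<float>(x[3])
        }
        first-phase {
            expression: closeness(field, embedding) + bm25(title)
        }
    }
}
"""


def hybrid_query_params(text: str, vector: list[float], target_hits: int = 10) -> dict:
    """Query parameters: keyword match OR nearest-neighbor retrieval, ranked by 'semantic'."""
    vec = "[" + ", ".join(str(float(v)) for v in vector) + "]"
    return {
        "yql": ("select * from doc where userQuery() or "
                f"({{targetHits: {target_hits}}}nearestNeighbor(embedding, q))"),
        "query": text,                 # consumed by userQuery()
        "ranking": "semantic",         # rank profile defined in SCHEMA
        "input.query(q)": vec,         # dense query tensor
        "hits": target_hits,
    }


params = hybrid_query_params("alpine news", [0.1, 0.2, 0.3])
url = "http://localhost:8080/search/?" + urlencode(params)
```

Keeping retrieval (YQL) separate from scoring (rank profile) is what lets Vespa teams tune relevance without changing application queries.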

| Advantages | Weaknesses |
|---|---|
| Built for extreme scale | Steeper learning curve |
| Sophisticated ranking control | Deployment more complex |
| Hybrid vector + metadata + rules | Smaller developer community |

Thoughts: Vespa is an engineer’s dream for large, complex search problems. Best suited to teams who can invest in custom tuning.

Summary: Which Path Is Right for You?

| Database | Best For | Scale Suitability |
|---|---|---|
| PostgreSQL | Existing analytics, dashboards | Small to medium |
| MongoDB | NoSQL apps, fast product prototyping | Medium |
| Elasticsearch | Hybrid search and e-commerce | Medium to large |
| Redis | Real-time personalization and chat | Small to medium |
| Vespa | News/media search, large data workloads | Enterprise-scale |

Final Reflections

Traditional databases may not have been designed with semantic search in mind — but they’re catching up fast. For many teams, they offer the best of both worlds: modern AI capability and a trusted operational base.

As you plan your next AI-powered feature, don’t overlook the infrastructure you already know. With the right extensions, traditional databases might surprise you.

In our next post, we’ll explore real-world architectures combining these tools, and look at performance benchmarks from independent tests.

Stay tuned — and if you’ve already tried adding vector support to your favorite DB, we’d love to hear what worked (and what didn’t).