The AI Productivity Paradox: Why Experts Slow Down

Why experts get slower, novices get faster, and context matters more than profession

The Paradox Nobody Expected

Experienced developers with five years of tenure, working on repositories exceeding one million lines of code, gained access to cutting-edge AI tools. Economists predicted they would speed up by 40%. Machine learning specialists forecast 36%. The developers themselves modestly expected a 24% boost.

The results of METR’s randomized controlled trial were the opposite: a 19% slowdown.

But that’s not the paradox. The paradox is what happened next: the same developers, measurably slower, continued to believe AI had sped them up by 20%. Objective reality and subjective perception diverged by nearly 40 percentage points.
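The size of that gap is easy to check. The short sketch below treats both figures as signed changes in speed, using only the two numbers quoted above; the function and variable names are illustrative, not taken from the study.

```python
# Figures quoted in the text, expressed as signed changes in speed (percent).
measured_change = -19   # measured result: 19% slower
perceived_change = 20   # developers' own estimate: 20% faster


def perception_gap(measured: float, perceived: float) -> float:
    """Distance between belief and measurement, in percentage points."""
    return perceived - measured


gap = perception_gap(measured_change, perceived_change)
print(f"Perception gap: {gap} percentage points")
```

The result is 39 points, which is where "nearly 40" comes from.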

This is no anecdote, nor a statistical anomaly. It’s a metaphor for a fundamental problem: we don’t see AI’s real impact on work. Our intuitions deceive us. Our predictions are systematically wrong. And the truth, it turns out, depends not on whether you use AI, but on the context in which you use it.

The $1.4 Trillion Iceberg

Picture an iceberg. Above the waterline—15%, the visible portion worth $211 billion. This is the tech sector: programmers, data scientists, IT specialists. This is where media attention flows, where debates about “AI replacing programmers” unfold.

Below the surface—85%, the hidden impact worth $1.2 trillion. These are financial analysts, lawyers, medical administrators, marketers, managers, educators, production planners, government employees. Research from MIT and Oak Ridge National Laboratory found that AI is technically capable of performing approximately 16% of all classified labor tasks in the American economy, and this exposure spans all three thousand counties in the country—not just the tech hubs on the coasts.
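The 15/85 split and the $1.4 trillion headline follow directly from the two dollar figures above. A minimal check, assuming the two portions are simply added together (variable names are illustrative):

```python
# Dollar figures quoted in the text, in billions of USD.
visible_usd_bn = 211     # tech-sector portion above the waterline
hidden_usd_bn = 1_200    # hidden portion below the surface ($1.2 trillion)

total_usd_bn = visible_usd_bn + hidden_usd_bn
visible_share = visible_usd_bn / total_usd_bn
hidden_share = hidden_usd_bn / total_usd_bn

print(f"Total: ${total_usd_bn / 1000:.1f} trillion")
print(f"Visible: {visible_share:.0%}, hidden: {hidden_share:.0%}")
```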

The International Monetary Fund confirms the scale: 40% of global employment is exposed to AI, rising to 60% in advanced economies. Unlike previous waves of automation that affected physical labor and assembly lines, the current wave strikes cognitive tasks—white-collar workers, office employees, those whose jobs seemed protected.

The iceberg metaphor will follow us further. Everywhere—in productivity, in quality, in costs—we encounter the same pattern: the visible picture conceals a more complex reality beneath the surface.

But who exactly wins and loses from this trillion-dollar impact?

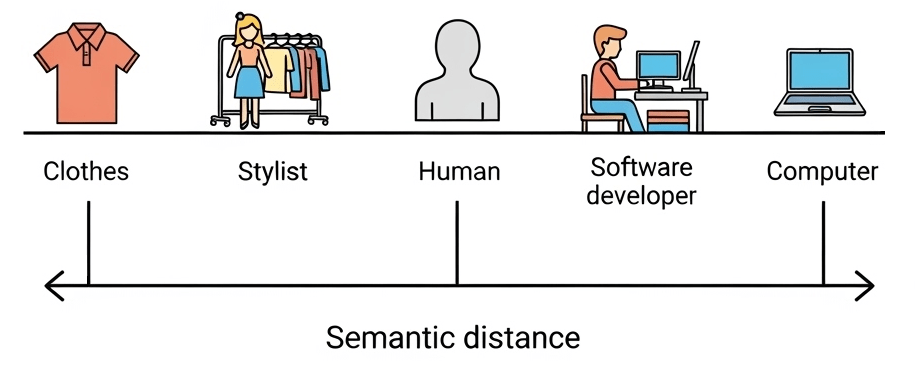

The Dialectic of Expertise: Winners and Losers

Experts Slow Down

Let’s return to METR’s study. Sixteen experienced open-source developers—people with deep knowledge of codebases over a decade old—completed 246 real tasks with and without an AI assistant. The methodology was rigorous: a randomized controlled trial, the gold standard of scientific research.

The result: a 19% slowdown. Fewer than 44% of AI suggestions were accepted, meaning more than half were rejected. Nine percent of work time went solely to reviewing and cleaning up AI-generated content.

GitClear’s research confirmed the mechanism on a larger sample: when AI-generated code from less experienced developers reached senior specialists, those experts saw a 6.5% increase in code review workload and a 19% drop in their own productivity. The burden shifted from the periphery to the team’s core.

Why does this happen? An expert looks at an AI suggestion and sees problems: “This doesn’t account for architectural constraint X,” “This violates implicit convention Y,” “This approach will break integration with component Z.” The cognitive load of filtering and fixing exceeds the savings from generation.
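One way to make this trade-off concrete is a simple break-even model. The sketch below is an illustration, not the method of any study cited here: the function, its parameters, and all input values are hypothetical. It assumes every suggestion costs some review time and only accepted suggestions save any.

```python
def net_minutes_saved(accept_rate: float,
                      minutes_saved_if_accepted: float,
                      review_minutes_per_suggestion: float) -> float:
    """Expected net time saved per AI suggestion.

    Every suggestion costs review time; only accepted ones pay anything back.
    A negative result means the assistant is a net slowdown.
    """
    return accept_rate * minutes_saved_if_accepted - review_minutes_per_suggestion


# Hypothetical inputs, chosen only to show both sides of the break-even point.
routine = net_minutes_saved(accept_rate=0.9,
                            minutes_saved_if_accepted=5.0,
                            review_minutes_per_suggestion=1.0)
legacy = net_minutes_saved(accept_rate=0.4,
                           minutes_saved_if_accepted=5.0,
                           review_minutes_per_suggestion=3.0)

print(f"Routine task: {routine:+.1f} min per suggestion")
print(f"Legacy-code task: {legacy:+.1f} min per suggestion")
```

With these made-up inputs the routine case comes out positive and the legacy-code case negative. The sign flips once review cost outgrows the accept rate times the time an accepted suggestion saves.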

But That’s Not the Whole Picture

Yet data from Anthropic paints the opposite picture. High-wage specialists—lawyers, managers—save approximately two hours per task using Claude. Low-wage workers save about thirty minutes. The World Economic Forum notes rising value in precisely those “human” skills (critical thinking, leadership, empathy) that experts possess.

The same high-wage specialists who should logically slow down save four times as much time as low-wage workers.

A paradox? Not quite.

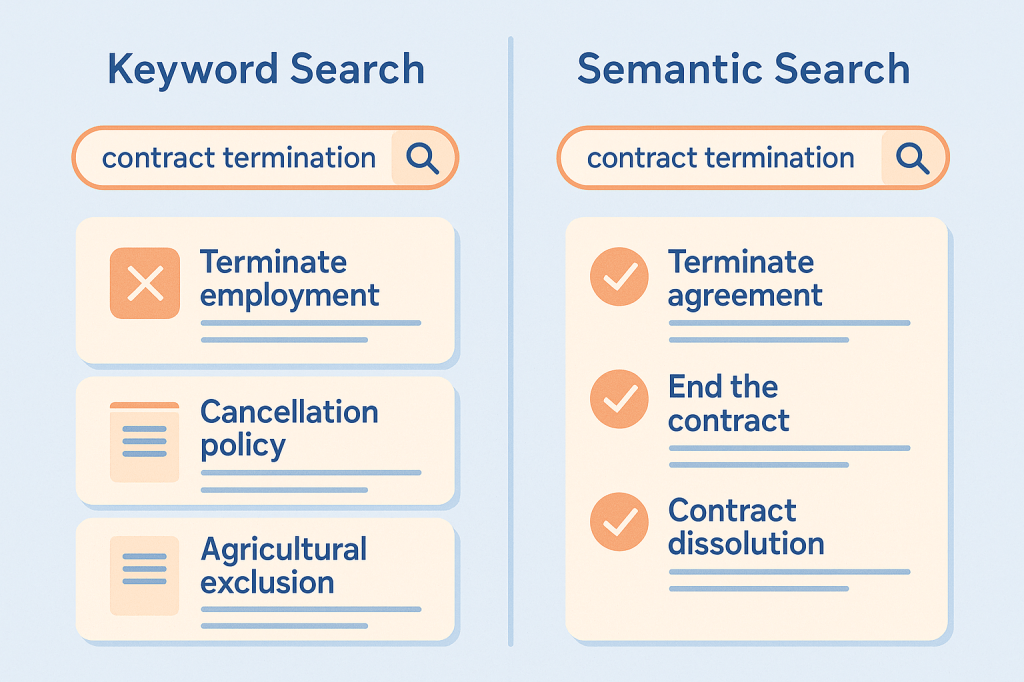

What This Means in Practice

METR’s study tested experts on complex tasks—in repositories with millions of lines of code, accumulated implicit context, architectural decisions made a decade ago. Anthropic’s data measured diverse tasks, including simple ones.

When a lawyer uses AI for a standard contract—acceleration. When a programmer applies AI to a complex architectural decision in legacy code—slowdown.

The same person can win and lose depending on the task.

This is the key insight that explains the seeming contradiction. The issue isn’t the profession, nor expertise level per se. The issue is the complexity of the specific task, the depth of required context, how structured or chaotic the problem is. Simple, routine operations speed up for everyone. Complex, context-dependent tasks can slow down even—especially—experts.

An important caveat: the expertise paradox is documented in detail for the IT sector. For lawyers, doctors, and financial analysts, it remains a hypothesis requiring empirical validation.

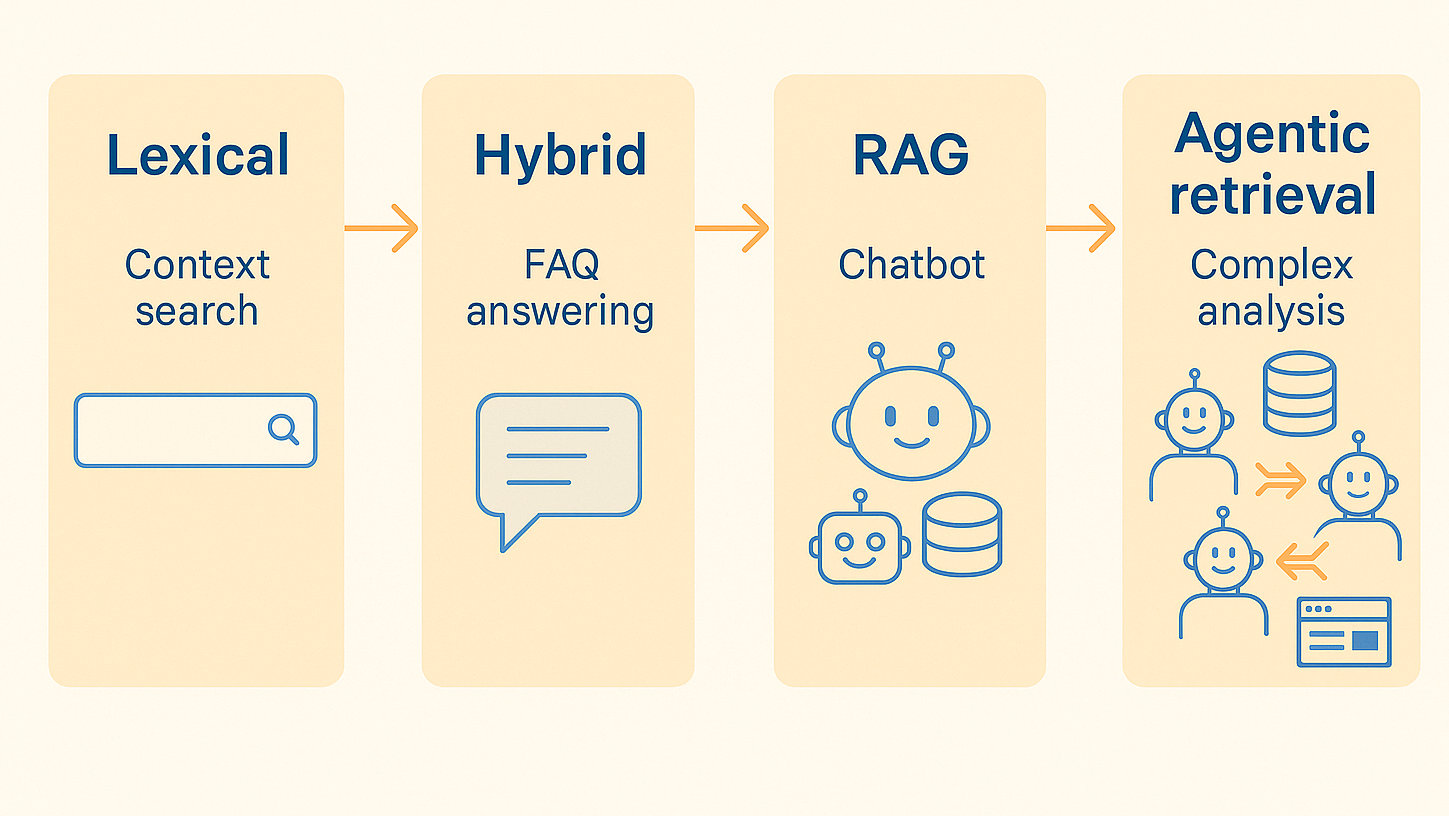

Augmentation vs. Displacement: No Apocalypse, But…

Augmentation Dominates

Seven key sources—OECD, WEF, McKinsey, IMF, Brookings, ILO, Goldman Sachs—form a robust consensus: AI’s primary vector is augmenting human labor, not replacing it.

The World Economic Forum forecasts 35 million new jobs by 2030. Brookings, analyzing real U.S. labor market data, finds no signs of an “apocalypse”: mass layoffs at the macro level simply aren’t happening. Goldman Sachs reports that AI has already added approximately $160 billion to U.S. GDP since 2022, and this is just the beginning.

Transformation instead of destruction. Task restructuring instead of profession elimination. An optimistic picture.

Yet Displacement Is Already Real in Specific Niches

Beneath the surface of macro-statistics lies a different reality.

Upwork recorded a 2% decline in contracts and a 5% decline in revenue for freelancers in the copywriting and translation categories. This isn’t a catastrophe, but these are the first statistically significant cracks: real displacement, not theoretical risk.

Goldman Sachs, for all its optimism about GDP growth, estimates the long-term risk of complete displacement at 6–7% of jobs. The OECD puts 27% of jobs in the high-risk automation zone.

No apocalypse—but the first casualties already exist.

The Pattern Depends on Task Type, Not Profession

Copywriting is a profession. But within it, there’s routine copywriting (product descriptions, standard texts) and complex creative copywriting (brand concepts, emotional narratives). Upwork’s data shows displacement of the first type. The second remains with humans.

Software development is a profession. But within it, there are simple tasks (boilerplate code, standard functions) and complex architectural decisions. The former accelerate for everyone. The latter slow down experts.

Same profession—different fates for different tasks.

Context again proves key. Routine cognitive tasks (even “creative” ones) are candidates for displacement. Complex, context-dependent tasks are augmentation territory. The boundary runs not between professions, but within them.

The Productivity Dialectic: Trillions and Their Hidden Cost

Trillions in Added Value

The numbers are impressive. McKinsey projects $2.6–4.4 trillion in annual added value for the global economy. Anthropic, creator of Claude, reports an 80% reduction in task completion time. Goldman Sachs forecasts a doubling of labor productivity growth rates.

Automation potential: 60–70% of work time. Four functions—marketing, sales, software development, and R&D—generate 75% of all value from generative AI adoption.

The productivity revolution economists talked about appears to have begun.

Hidden Costs

GitClear analyzed 153 million changed lines of code over four years. The results are concerning:

- Code churn (code that is deleted or rewritten less than two weeks after it was written) is rising.

- The share of refactoring (improving code structure) is falling, from 16% to 9%.

- In 2024, for the first time, the share of copy-pasted code exceeded the share of refactoring.

AI encourages writing code but not maintaining it, not improving architecture, not ensuring long-term quality.

Research records a 6.5% increase in expert workload for reviewing AI-generated content. The OECD cautiously notes risks of “work intensification,” a euphemism for rising stress and cognitive overload. A Purdue University study found that 52% of ChatGPT responses to programming questions contain errors, yet users fail to notice them in 39% of cases.
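The two Purdue percentages can be combined into one rough figure. The sketch below assumes that the 39% miss rate applies only to answers that actually contain an error; that reading is an assumption and is not stated in the text.

```python
# Figures quoted in the text.
share_with_errors = 0.52   # share of answers that contain errors
miss_rate = 0.39           # share of cases where users fail to notice

# Assumption: the miss rate is conditional on the answer being wrong.
unnoticed_error_share = share_with_errors * miss_rate
print(f"Answers with an unnoticed error: {unnoticed_error_share:.0%}")
```

Under that assumption, roughly one answer in five would carry an error that the reader never catches.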

We Measure Output While Missing Outcome

The iceberg metaphor applies again. Visible: lines of code, completed tasks, saved hours. Hidden: technical debt, maintainability, decision quality, expert workload.

Productivity metrics measure output (what’s produced). They don’t measure outcome (what value this creates in the long term). When a company sees a 50% increase in completed tasks, it doesn’t see that the accumulating technical debt will require double the investment a year later.
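The difference between the two measures can be shown with a toy calculation. Everything in the sketch below is hypothetical: the `YearSummary` class, the idea of expressing deferred rework in task-equivalents, and the numbers themselves are illustrative assumptions. Only the 50% rise in completed tasks echoes the example above.

```python
from dataclasses import dataclass


@dataclass
class YearSummary:
    tasks_completed: float     # output: what the dashboard shows
    rework_cost_tasks: float   # deferred cost, in task-equivalents (hypothetical unit)

    @property
    def outcome(self) -> float:
        """Output net of the rework it later forces."""
        return self.tasks_completed - self.rework_cost_tasks


# Hypothetical numbers for illustration only.
baseline = YearSummary(tasks_completed=100, rework_cost_tasks=20)
with_ai = YearSummary(tasks_completed=150, rework_cost_tasks=90)

output_gain = with_ai.tasks_completed / baseline.tasks_completed - 1
outcome_gain = with_ai.outcome / baseline.outcome - 1

print(f"Output change:  {output_gain:+.0%}")
print(f"Outcome change: {outcome_gain:+.0%}")
```

With these made-up inputs, output rises by half while outcome falls by a quarter. A dashboard that tracks only completed tasks would show the first number and never the second.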

Short-term gains at the cost of long-term problems—a classic pattern concealed behind optimistic statistics.

This doesn’t negate AI’s real benefits. But it reminds us: the full picture includes the invisible part of the iceberg.

Inequality as an Inevitable Consequence

All the patterns described converge at one point: AI amplifies existing inequality along several axes simultaneously.

Wage gap. High-wage specialists save about 2 hours per task, low-wage workers—about 30 minutes. Those whose work is already valuable receive more assistance. OECD documents the formation of a wage premium for AI skills—the gap between those who master the technology and everyone else will widen.

Gender. ILO reports: women are overrepresented in administrative and clerical roles—professions with high automation exposure. Labor market transformation may hit them disproportionately hard.

Geography. Advanced economies (60% exposure) face greater impact than the world as a whole (40%). The paradox: wealthy countries with larger shares of cognitive work are more vulnerable to AI-driven transformation. But they also have more resources for adaptation.

Skills. The expertise paradox adds a strange dimension: in the short term, novices benefit more than experts. But a long-term risk emerges: if AI handles the routine tasks through which novices learn, how do we develop the next generation of experts? Skill atrophy is a hidden threat beneath the surface of today’s gains.

All of this follows from one underlying pattern: context determines outcome. The same factors (high income, cognitive work, developed economy) create both maximum opportunities for augmentation and maximum vulnerability to displacement. Whether you win or lose depends on which specific tasks make up your work and how you adapt.

Return to the Paradox

Let’s return to the image we started with.

Experienced developers slowed down by 19% but were convinced they had sped up by 20%. Objective reality and subjective perception diverged by nearly 40 percentage points.

This cognitive bias is a metaphor for the entire problem. None of us sees the reality of AI’s impact on work. Our assessments are distorted by optimism, hype, and a failure to grasp nuance.

Macro forecasts promise trillions of dollars in growth. Micro studies show expert slowdowns and technical debt accumulation. Both are true. The difference lies in context, in the level of analysis, in which part of the iceberg we’re looking at.

The main takeaway: AI’s impact depends on context—the same person can win and lose depending on the task. This explains all the apparent paradoxes:

- Experts slow down on complex tasks but may speed up on simple ones.

- High-wage professions receive more assistance but also face greater exposure risk.

- Augmentation dominates overall, but displacement is real in specific niches.

- Productivity rises by the metrics, yet hidden costs accumulate beneath the surface.

We don’t face a choice between “embrace AI or reject it.” We face the necessity of understanding nuances: which tasks accelerate, which slow down; where augmentation applies, where displacement; what gets measured and what lies hidden underwater.

The iceberg is real. The visible 15% shapes the discourse. The hidden 85% determines the future.

And as with real icebergs—ignoring what’s below the waterline has consequences.
