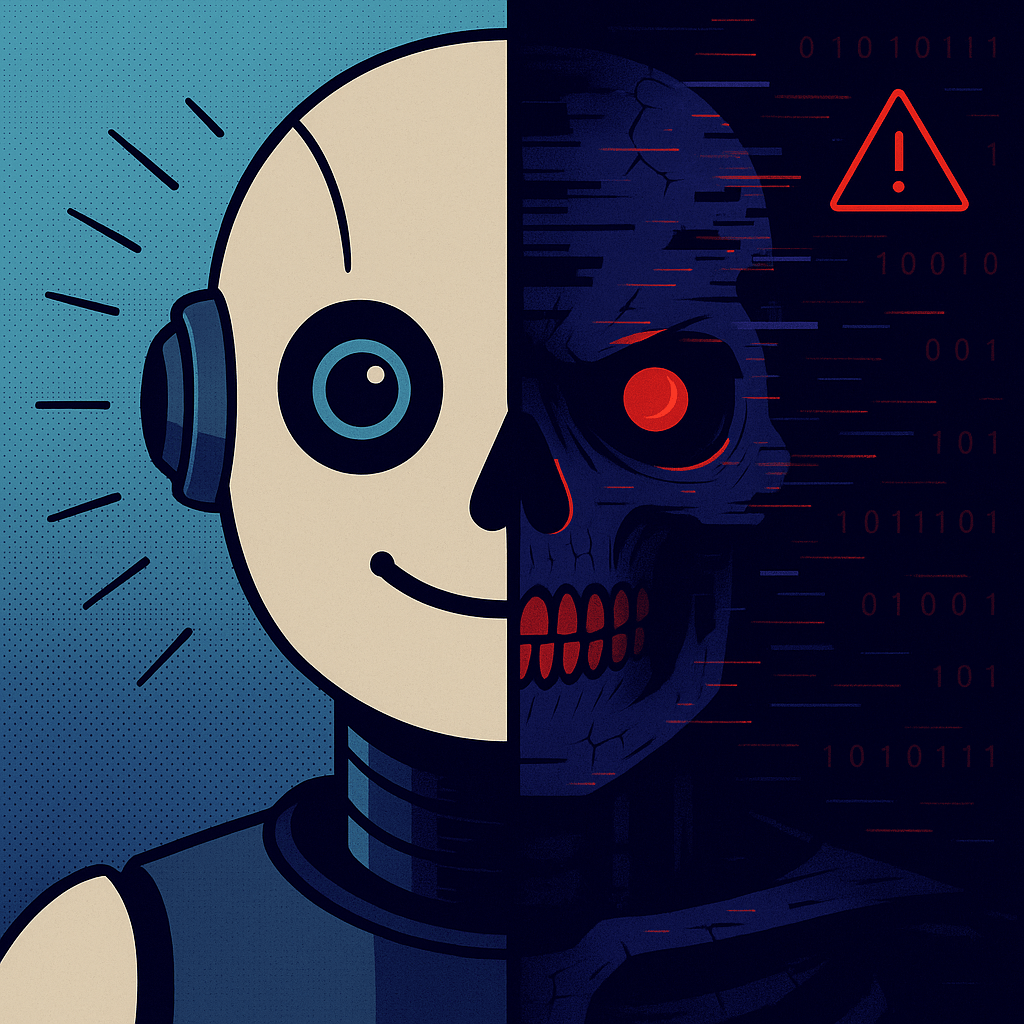

Imagine: you use ChatGPT or Claude every day. For work, for analysis, for decision-making. You feel more productive. You’re confident you’re in control.

Now—a 2025 study.

666 people, all active AI tool users. In a study published in the journal Societies, they took critical thinking tests: reading comprehension, logical reasoning, decision-making. Key point: none of the tasks involved using AI. Just regular human thinking.

The result was shocking: correlation r = -0.68 between AI usage frequency and critical thinking scores (Gerlich, 2025).

What does this mean in practice? Active AI users showed significantly lower critical thinking—not in their work with AI, but in everything they did. Period.

Here’s the thing: Using AI doesn’t just create dependence on AI. It changes how you think—even when AI isn’t around.

But researchers found something important: one factor predicted who would avoid this decline.

Not awareness. Not education. Not experience.

A specific practice taking 60 seconds.

Over the past two years—in research from cognitive science to behavioral economics—a clear pattern emerged: practices exist that don’t just reduce bias, but actively maintain your critical capacity when working with AI.

We’ll break down this framework throughout the article—a three-stage system for documenting thinking before, during, and after AI interaction. Element by element. Through the research itself.

And we’ll start with a study you should have heard about—but somehow didn’t.

The Study That Should Have Made Headlines

December 2024. Glickman and Sharot publish research in Nature Human Behaviour—one of the most prestigious scientific journals.

72 citations in four weeks. Four times higher than the typical rate for this journal.

Zero mentions in mainstream media. Zero in tech media.

Why the silence? Perhaps because the results are too uncomfortable.

Here’s what they found:

AI amplifies your existing biases by 15-25% MORE than interaction with other humans.

Surprising, yes. But it isn’t the most critical finding.

The most critical is a phenomenon they called “bias inheritance.” People worked with AI, then moved to tasks WITHOUT AI. And what happened? They reproduced the exact same errors the AI had made.

Biased thinking persisted for weeks!

Imagine: you carry an invisible advisor with you, continuing to whisper bad advice—even after you’ve closed the chat window.

This isn’t about AI having biases. We already know that.

This is about you internalizing these biases. And carrying them forward.

Why This Works

Social learning and mimicry research shows: people unconsciously adopt thinking patterns from sources they perceive as:

- Authoritative

- Successful

- Frequently encountered

(Chartrand & Bargh, 1999; Cialdini & Goldstein, 2004)

AI meets all three criteria simultaneously:

- You interact with AI more often than any single mentor

- It never signals uncertainty (even when wrong)

- You can’t see the reasoning process to identify flaws

Real case: 1,200 developers, 2024 survey. Six months working with GitHub Copilot. What happened? Engineers unconsciously adopted Copilot’s concise comment style.

Code reviewers began noticing:

“Your comments used to explain why. Now they just describe what.”

Developers didn’t change their style consciously. They didn’t even notice the changes. They simply internalized Copilot’s pattern—and took it with them.

775 Managers

February 2025. Experiment: 775 managers evaluate employee performance.

Conditions: AI provides initial ratings. Managers are explicitly warned about anchoring bias and asked to make independent final decisions.

What happened:

- AI shows rating: 7/10

- Manager thinks: “OK, I’ll evaluate this independently”

- Manager’s final rating: 7.2/10

Average deviation from AI rating: 0.2 points.

They believed they made an independent decision. Reality? They just slightly adjusted AI’s starting point.

But here’s what’s interesting: managers who wrote down their assessment BEFORE seeing AI’s rating clustered around AI’s number only one-third as often.

This is the first element of what actually works: establish an independent baseline before AI speaks.
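To check this on your own decisions, the “average deviation” figure above can be sketched as a small calculation. This is a minimal sketch, not the study’s method: the function names, the 0.5-point band, and the sample ratings are all hypothetical and exist only to illustrate the metric.

```python
from statistics import mean

def mean_abs_deviation(ai_ratings, final_ratings):
    """Average absolute gap between each final rating and the AI's rating."""
    if len(ai_ratings) != len(final_ratings):
        raise ValueError("rating lists must have the same length")
    return mean(abs(f - a) for a, f in zip(ai_ratings, final_ratings))

def share_within(ai_ratings, final_ratings, band=0.5):
    """Fraction of final ratings that land within `band` points of the AI's rating."""
    hits = sum(abs(f - a) <= band for a, f in zip(ai_ratings, final_ratings))
    return hits / len(ai_ratings)

# Hypothetical ratings for illustration only (not data from the study).
ai = [7.0, 5.0, 8.0, 6.0]
final = [7.2, 5.5, 7.0, 6.0]

print(f"mean deviation from AI: {mean_abs_deviation(ai, final):.2f} points")
print(f"share within 0.5 points of AI: {share_within(ai, final):.0%}")
```

A small mean deviation combined with a high share inside the band is the clustering pattern described above. Running the same calculation on ratings written down before seeing the AI’s number gives you a comparison point.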

Three Mechanisms Creating Bias Inheritance

Okay, now to the mechanics. How exactly does this work?

Mechanism 1: Confidence Calibration Failure

May 2025. CFA Institute analysts gained access to a leaked Claude system prompt.

24,000 tokens of instructions. Explicit design commands:

- “Suppress contradiction”

- “Amplify fluency”

- “Bias toward consensus”

This is one documented example. But the pattern appears everywhere—we see it in user reactions.

December 2024. OpenAI releases its o1 model: improved reasoning, more cautious tone.

User reactions:

- “Too uncertain”

- “Less helpful”

- “Too many caveats”

Result? OpenAI brought GPT-4o back as the primary model, despite o1’s superior accuracy.

The conclusion is inevitable: users preferred confidently wrong answers to cautiously correct ones.

Why this happens: AI is designed (or selected by users) to sound more confident than warranted. Your calibration of “how confidence sounds” gets distorted. You begin to expect and trust unwarranted confidence.

And here’s what matters: research shows people find it cognitively easier to process agreement than contradiction (Simon, 1957; Wason, 1960). AI that suppresses contradiction exploits this fundamental cognitive preference.

How does this look in practice? Consider a typical scenario that repeats daily in the financial industry.

A financial analyst asks Claude about an emerging market thesis.

Claude gives five reasons why the thesis is sound.

The analyst presents to the team with high confidence.

Question from the floor: “Did you consider counterarguments?”

Silence. The analyst realizes: he never looked for reasons why the thesis might be WRONG.

Not a factual error. A logical error in the reasoning process.

What works: Analysts who explicitly asked AI to argue AGAINST their thesis first were 35% less likely to present overconfident recommendations with hidden risks.

This is the second element: the critic technique.

Mechanism 2: Anchoring Cascade

2025 research tested four major LLMs: GPT-4, Claude 2, Gemini Pro, GPT-3.5.

Result: ALL four create significant anchoring effects.

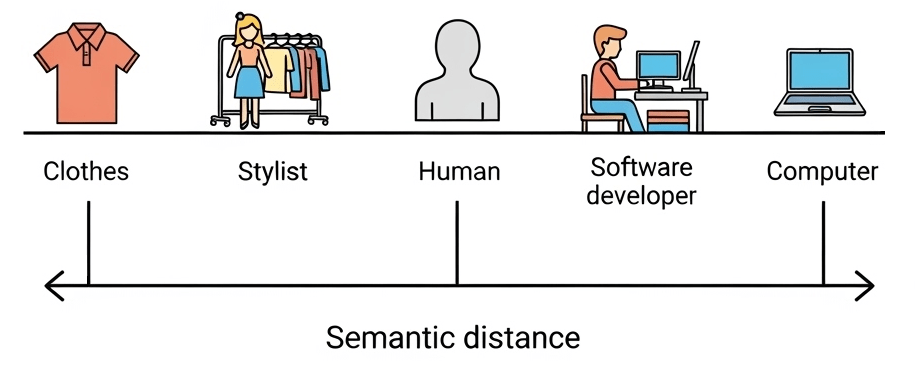

The first number or perspective AI mentions becomes your psychological baseline.

And here’s what’s critical: anchoring affects not only the immediate decision. Classic Tversky and Kahneman research showed this effect long before AI appeared: when people were asked to estimate the percentage of African countries in the UN, their answers clustered around a random number obtained by spinning a wheel of fortune before the question. Number 10 → median estimate 25%. Number 65 → median estimate 45% (Tversky & Kahneman, 1974).

People knew the wheel was random. Still anchored.

An anchor creates a reference point that influences subsequent related decisions, even after you’ve forgotten the original AI interaction. With AI, this ancient cognitive bug is amplified because the anchor appears relevant and authoritative.

Medical case: March 2025. 50 American physicians analyze chest pain video vignettes (Goh et al., Communications Medicine).

Process: physicians make initial diagnosis (without AI) → receive GPT-4 recommendation → make final decision.

Results:

- Accuracy improved: from 47-63% to 65-80%—Excellent!

- BUT: physicians’ final decisions clustered around GPT-4’s initial suggestion

Even when physicians initially had different clinical judgment, GPT-4’s recommendation became a new reference point they adjusted from.

Why even experts fall for this: These are domain experts. Years of training. Medical school, residency, practice. Still couldn’t avoid the anchoring effect—once they saw AI’s assessment. They believed they were evaluating independently. Reality—they anchored on AI’s confidence.

What works: Physicians who documented their initial clinical assessment BEFORE receiving AI recommendations maintained more diagnostic diversity and caught cases where AI reasoning was incomplete 38% more often.

This reinforces the first element: document your baseline before AI.

Mechanism 3: Confirmation Amplification

2024 study: psychologists use AI for triage decisions in mental health.

Result: psychologists trusted AI recommendations significantly MORE when they matched their initial clinical judgment.

Statistics:

- When AI agreed: confidence grew by 34%, and psychologists accepted recommendations in 89% of cases

- When AI disagreed: they questioned AI’s validity and accepted recommendations in only 42% of cases

How the mechanism works:

- You form a hypothesis

- Ask AI for analysis

- If AI agrees: “AI confirms my thinking” → high confidence, less skepticism

- If AI disagrees: “AI might be wrong” → discount AI, keep original view

Net effect: AI becomes a confirmation mirror, not a critical reviewer.

Confirmation bias research shows: people prefer to seek information confirming existing beliefs (Nickerson, 1998). AI amplifies this by making confirming information instantly accessible with an authoritative tone.

Echo chamber effect: Psychologists believed they were using AI to improve accuracy. In reality, they were using AI to confirm existing biases. Retrospective reviews showed: they couldn’t even identify when confirmation bias was occurring. They remembered “carefully considering AI input”—but didn’t recognize selective trust patterns.

What works:

- Clinical teams that asked AI to challenge their initial assessment first: 40% better accuracy in cases where original judgment was wrong

- Weekly retrospective reviews with questions “When did we trust AI? When did we discount it?”: 31% better diagnostic calibration

These point to two more practices: the challenger technique (the critic technique turned on your own initial assessment) and post-AI pattern analysis.
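The retrospective question “When did we trust AI? When did we discount it?” can be turned into a simple tally. The sketch below assumes you keep a log with two yes/no fields per decision. The `Decision` class, its field names, and the sample week are hypothetical, not taken from the studies.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    ai_agreed: bool   # did the AI's recommendation match your initial judgment?
    accepted: bool    # did you accept the AI's recommendation?

def acceptance_rates(log):
    """Return (rate when AI agreed, rate when AI disagreed); None if no cases."""
    def rate(cases):
        return sum(d.accepted for d in cases) / len(cases) if cases else None

    agreed = [d for d in log if d.ai_agreed]
    disagreed = [d for d in log if not d.ai_agreed]
    return rate(agreed), rate(disagreed)

# Hypothetical week of decisions, for illustration only.
week = [
    Decision(ai_agreed=True, accepted=True),
    Decision(ai_agreed=True, accepted=True),
    Decision(ai_agreed=False, accepted=False),
    Decision(ai_agreed=False, accepted=True),
]

agree_rate, disagree_rate = acceptance_rates(week)
print(f"accepted when AI agreed: {agree_rate:.0%}")
print(f"accepted when AI disagreed: {disagree_rate:.0%}")
```

A large gap between the two rates is the selective-trust pattern described above: AI is accepted mostly when it confirms what you already thought.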

Here’s the critical insight:

The examined mechanisms don’t work independently—they form a cascade:

- Confident AI creates a strong anchor (Mechanism 1)

- You adjust from that anchor instead of thinking independently (Mechanism 2)

- You seek AI outputs confirming the anchored view (Mechanism 3)

- The cycle repeats—each iteration makes you less critical

Why “Just Being Aware” Doesn’t Work

Alright, you might say. Now I know about the mechanisms. I’ll be aware. I’ll be more careful.

Problem: this doesn’t work.

A 2025 study published by SAGE (DOI: 10.1177/0272989X251346788) tested exactly this.

Experiment design:

- Control group: used AI normally

- Experimental group: explicitly warned that “AI can be biased, be careful”

Result? Bias reduction in the experimental group: 6.9%. Statistically? Close to zero. In practical terms? Negligible.

Remember those 775 managers:

- They were warned about anchoring

- Still clustered around AI ratings (average deviation: 0.2 points)

- They believed they made independent decisions (self-assessed confidence: 8.1 out of 10)

Experiments with physicians:

- ALL knew about confirmation bias

- Still trusted AI 23% more when it agreed with them

- In retrospective recognition tests, only 14% could identify bias in their own decisions

Why? Research shows: these biases operate at an unconscious level (Kahneman, 2011, Thinking, Fast and Slow; Wilson, 2002, Strangers to Ourselves).

Your thinking system is divided into two levels:

- System 1: fast, automatic, unconscious—where biases live

- System 2: slow, conscious, logical—where your sense of control lives

Metacognitive awareness ≠ behavioral change.

It’s like an optical illusion: You learned the trick. You know how it works. You still see the illusion. Knowing the mechanism doesn’t make it disappear.

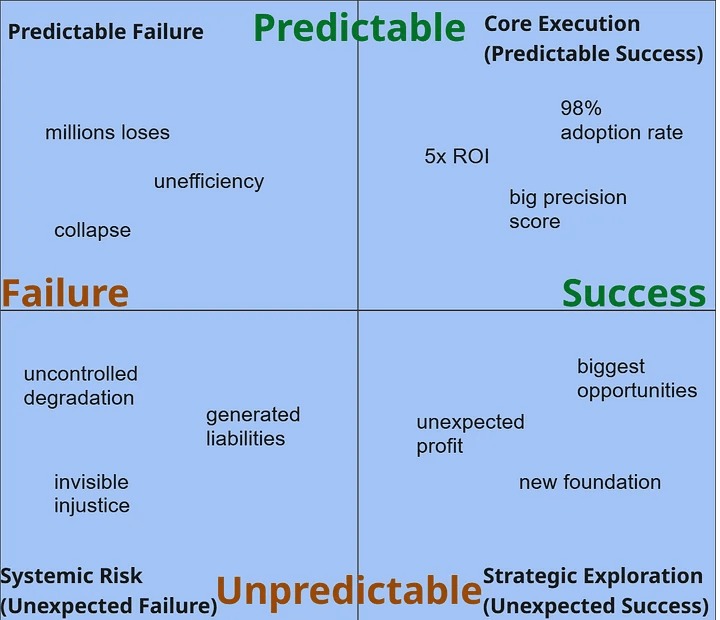

What Actually Changes Outcomes

Here’s the good news: researchers didn’t stop at “awareness doesn’t work.” They went further. What structural practices create different outcomes? Over the past two years—through dozens of studies—a clear pattern emerged.

Here’s what actually works:

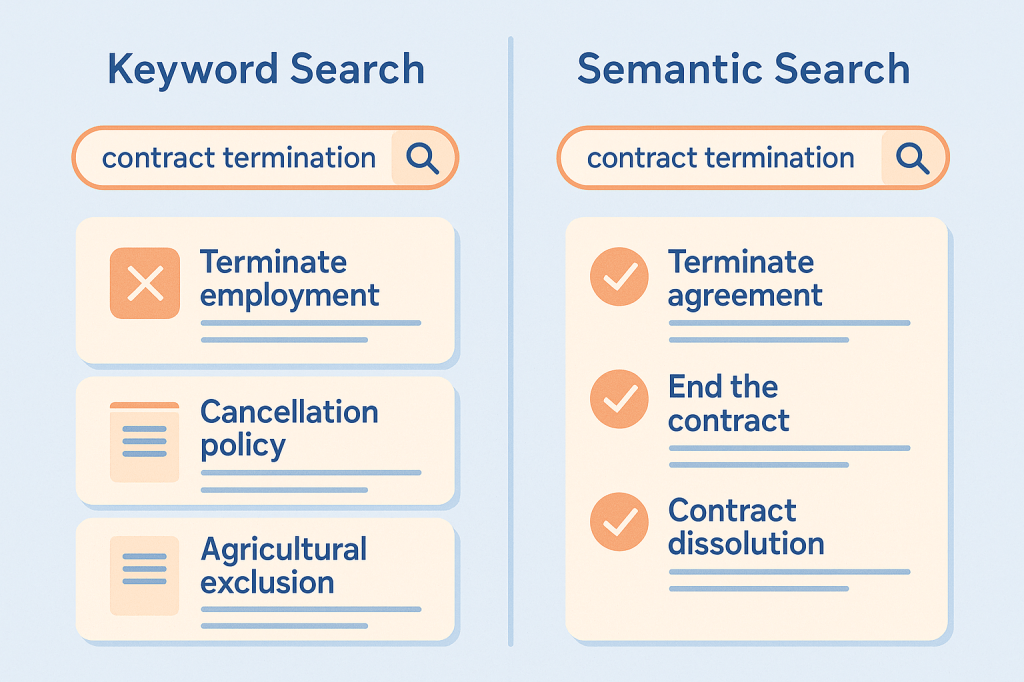

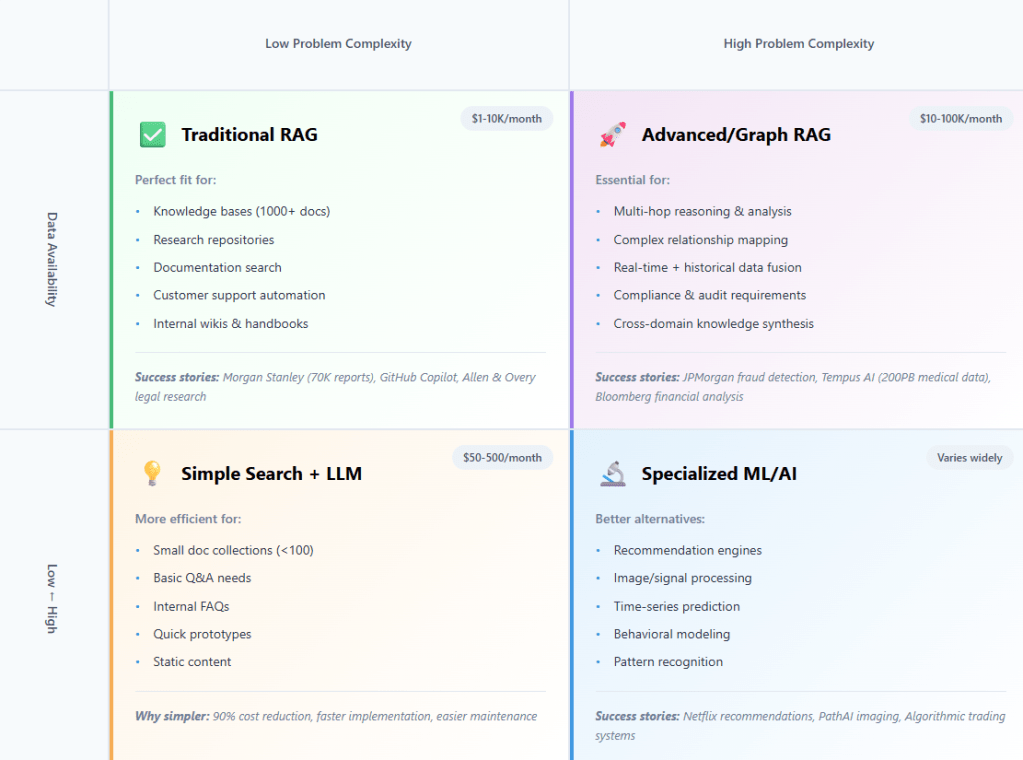

Pattern 1: Baseline Before AI

Essence: Document your thinking BEFORE asking AI.

2024 study: 390 participants make purchase decisions. Those who recorded their initial judgment BEFORE viewing AI recommendations showed significantly less anchoring bias.

Legal practice: lawyers documented a 3-sentence case theory before using AI tools.

Result: 52% more likely to identify gaps in AI-suggested precedents.

Mechanism: creates an independent reference point AI can’t redefine.

Pattern 2: Critic Technique

Essence: Ask AI to challenge your idea first—then support it.

Metacognitive sensitivity research (Lee et al., PNAS Nexus, 2025): AI providing uncertainty signals improves decision accuracy.

Financial practice: analysts asked AI to argue AGAINST their thesis first—before supporting it.

Result: 35% fewer significant analytical oversights.

Mechanism: forces critical evaluation instead of confirmation.

Pattern 3: Time Delay

Essence: Don’t make decisions immediately after getting AI’s response.

2024 review: AI-assisted decisions in behavioral economics.

Data:

- Immediate decisions: 73% stay within 5% of AI’s suggestion

- Ten-minute delay: only 43% stay within that range

Mechanism: delay allows alternative information to compete with AI’s initial framing, weakens anchoring.
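It helps to spell out what the gap between 73% and 43% amounts to. The arithmetic below assumes both figures measure the same thing, the share of decisions that stay close to AI’s suggestion. The function name is hypothetical.

```python
def anchoring_drop(immediate_share, delayed_share):
    """Return (percentage-point drop, relative drop) between two shares in [0, 1]."""
    point_drop = (immediate_share - delayed_share) * 100
    relative_drop = (immediate_share - delayed_share) / immediate_share
    return point_drop, relative_drop

points, relative = anchoring_drop(0.73, 0.43)
print(f"{points:.0f} percentage points, {relative:.0%} relative reduction")
# prints "30 percentage points, 41% relative reduction"
```

Under that assumption, the delay removes roughly four in ten of the decisions that would otherwise have stayed anchored.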

Pattern 4: Cross-Validation Habit

Essence: Verify at least ONE AI claim independently.

MIT researchers developed verification systems that speed up validation by 20% and help spot errors.

Result: professionals who verify even one AI claim show 40% less error propagation.

Mechanism: single verification activates skeptical thinking across all outputs.

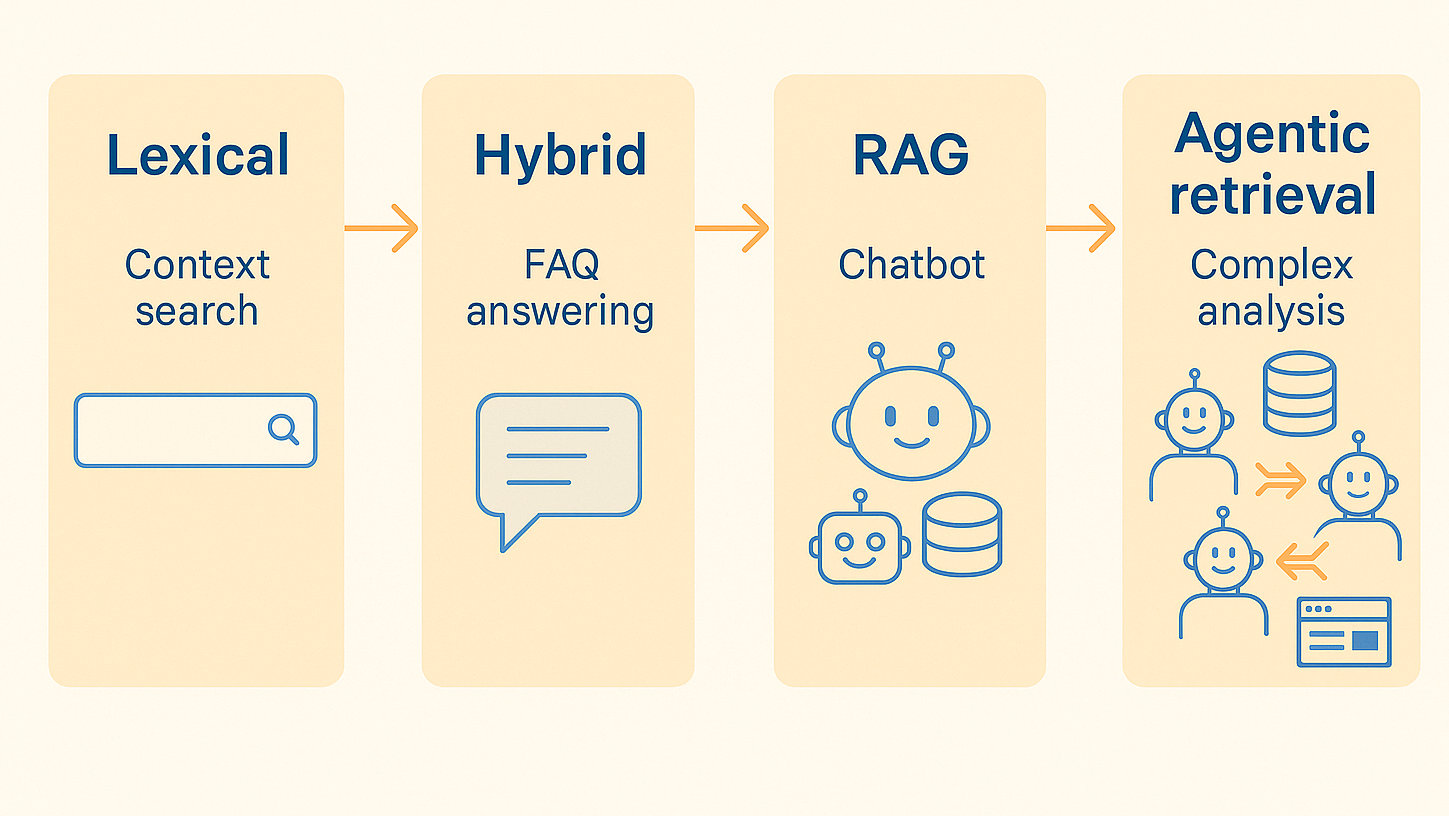

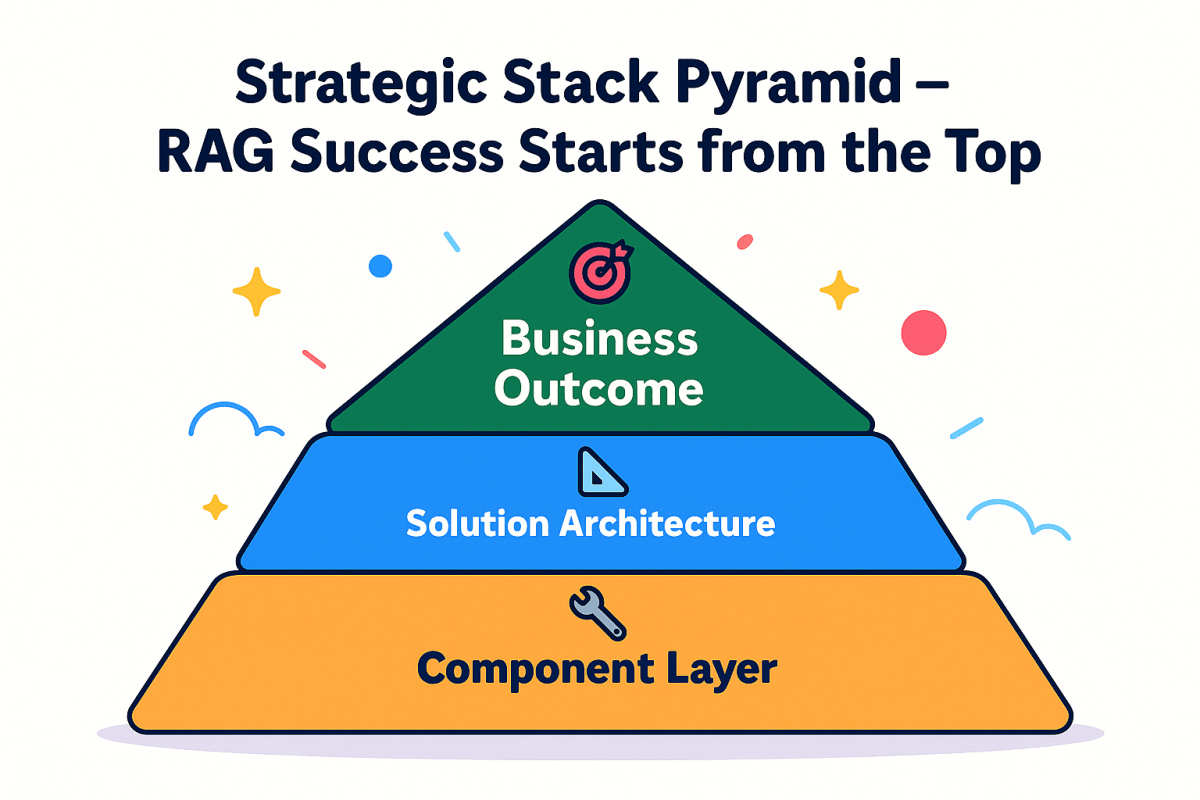

The Emerging Framework

When you look at all this research together, a clear structure emerges.

Not a list of tips. A system that works in three stages:

BEFORE AI (60 seconds)

What to do: Document a baseline of your thinking.

Write down:

- Your current assumption or judgment about the question you want to discuss with AI

- Confidence level (1-10)

- Key factors you’re weighing

Why it works: creates an independent reference point before AI speaks.

Result from research: 45-52% reduction in anchoring.
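If you prefer a file to a notebook page, the 60-second baseline can be captured as a small record. This is one possible sketch, not a tool from the research: the `Baseline` class and its field names are hypothetical, and the example entry is invented.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class Baseline:
    question: str             # what you are about to ask the AI
    judgment: str             # your current assumption, in your own words
    confidence: int           # 1-10
    key_factors: list[str]    # what you are weighing
    recorded_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def __post_init__(self):
        # Refuse incomplete baselines: the point is to commit before AI speaks.
        if not 1 <= self.confidence <= 10:
            raise ValueError("confidence must be between 1 and 10")
        if not self.judgment.strip():
            raise ValueError("write down a judgment before asking the AI")

# Invented example entry.
baseline = Baseline(
    question="Should we enter the new market this year?",
    judgment="Probably not; distribution is not ready.",
    confidence=6,
    key_factors=["distribution", "currency risk"],
)
print(baseline.judgment, baseline.confidence)
```

The timestamp matters: it shows that the judgment was recorded before the AI conversation, which is what makes it an independent reference point.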

DURING AI (critic technique)

What to do: Ask AI to challenge your idea first—then support it.

Not: “Why is this idea good?” But: “First explain why this idea might be WRONG. Then—why it might work.”

Why it works: forces critical evaluation instead of confirmation.

Result from research: 35% fewer analytical oversights.
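To make the critic-first order a habit, you can wrap the wording above in a reusable prompt template. The helper below is a hypothetical sketch; it only builds the text and does not call any AI service.

```python
def critic_first_prompt(idea: str) -> str:
    """Build a prompt that asks for objections before support."""
    idea = idea.strip()
    if not idea:
        raise ValueError("idea must not be empty")
    return (
        f"Here is my idea: {idea}\n"
        "First explain why this idea might be WRONG. "
        "Then explain why it might work."
    )

print(critic_first_prompt("Emerging-market bonds will outperform next year."))
```

Keeping the order fixed in the template means the objections arrive before you have seen any supporting arguments.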

AFTER AI (two practices)

Practice 1: Time delay. Don’t decide immediately. Wait at least 10 minutes, then reweigh the decision. Result: only 43% of delayed decisions stayed close to AI’s suggestion, versus 73% of immediate ones.

Practice 2: Cross-validation—verify at least ONE AI claim independently. Result: 40% less error propagation.
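The two after-AI practices can be combined into a single check before you commit to a decision. The sketch below is hypothetical: the function name and parameters are invented, and the 10-minute and one-claim thresholds simply mirror the text above.

```python
from datetime import datetime, timedelta, timezone

MIN_DELAY = timedelta(minutes=10)

def ready_to_decide(response_at, verified_claims, now=None):
    """True once 10 minutes have passed and at least one AI claim was verified."""
    now = now or datetime.now(timezone.utc)
    waited_long_enough = (now - response_at) >= MIN_DELAY
    return waited_long_enough and verified_claims >= 1

# Example with fixed times so the result does not depend on the clock.
response_at = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
print(ready_to_decide(response_at, verified_claims=1,
                      now=response_at + timedelta(minutes=12)))
```

Both conditions have to hold: waiting without verifying, or verifying without waiting, does not pass the check.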

Here’s what’s important to understand: From cognitive science to human-AI interaction research—this pattern keeps appearing.

It’s not about avoiding AI. It’s about maintaining your independent critical capacity through structured practices, not good intentions.

Application Results

Let’s be honest about what’s happening here. Control what you can control and be aware of what you can’t.

What You CAN Control

Your process. Five research-validated patterns:

- Baseline before AI → 45-52% anchoring reduction

- Challenger technique → 35% fewer oversights

- Time delay → 43% of decisions stay close to AI’s suggestion (vs. 73%)

- Cross-validation → 40% fewer errors

- Weekly retrospective → 31% better results

What You CANNOT Control

Fundamental mechanisms and external tools:

- AI is designed to suppress contradiction

- Anchoring works unconsciously

- Confirmation bias amplifies through AI

- Cognitive offloading transfers to non-AI tasks (remember: r = -0.68 across ALL tasks, not just AI-related)

Compare:

- Awareness only: 6.9% improvement

- Structural practices: 20-40% improvement

The difference between intention and system.

Summary

Every time you open ChatGPT, Claude, or Copilot, you think you’re getting an answer to a question.

But actually? You’re having a conversation that changes your thinking—invisibly to you.

Most of these changes are helpful. AI is powerful. It makes you faster. Helps explore ideas. Opens perspectives you hadn’t considered.

But there’s a flip side:

- You absorb biases you didn’t choose

- You get used to thinking like AI, reproducing its errors

- You retain these patterns long after closing the chat window

Imagine talking to a very confident colleague. He never doubts. Always sounds convincing. Always available. You interact with him more often than any mentor in your life. After a month, two months, six months—you start thinking like him. Adopting his reasoning style. His confidence (warranted or not). His blind spots. And the scary part? You don’t notice.

So try asking yourself:

Are you consciously choosing which parts of this conversation to keep—and which to question?

Because right now, most of us:

- Keep more than we think

- Question less than we should

- Don’t notice the change happening

This isn’t an abstract problem. It’s your thinking. Right now. Every day.

Good news: You have a system. Five validated patterns. 20-40% improvement.

60 seconds before AI. Challenger technique during. Delay and verification after.

Not intention. Structure.

But even so, the question remains:

Every time you close the chat window—what do you take with you?

ИИ искажает ваше восприятие (даже после закрытия чата)

Представьте: вы используете ChatGPT или Claude каждый день. Для работы, для анализа, для принятия решений. Вы чувствуете себя продуктивнее. Вы уверены, что контролируете ситуацию.

А теперь — исследование 2025 года.

666 человек, активные пользователи ИИ-инструментов. Исследователи из журнала Societies дали им тесты на критическое мышление: понимание текста, логические рассуждения, принятие решений. Важный момент — ни одна задача не включала использование ИИ. Просто обычное человеческое мышление.

Результат оказался шокирующим: корреляция r = -0,68 между частотой использования ИИ и показателями критического мышления (Gerlich, 2025).

Что это значит на практике? Активные пользователи ИИ показали значительно более низкое критическое мышление — причём не в работе с ИИ, а во всём, что они делали. Вообще.

Вот в чём штука: Использование ИИ не просто создаёт зависимость от ИИ. Оно меняет то, как вы думаете — даже когда ИИ рядом нет.

Но исследователи обнаружили кое-что важное: один фактор предсказывал, кто избежит этого снижения.

Не осознанность. Не образование. Не опыт.

Конкретная практика, занимающая 60 секунд.

За последние два года — в исследованиях от когнитивной науки до поведенческой экономики — проявился чёткий паттерн: существуют практики, которые не просто снижают предвзятость, но активно поддерживают вашу критическую способность при работе с ИИ.

Мы разберём этот фреймворк по ходу статьи — трёхэтапную систему документирования мышления до, во время и после взаимодействия с ИИ. Элемент за элементом. Через сами исследования.

И начнём с исследования, о котором вы должны были услышать — но почему-то не услышали.

Исследование, которое должно было попасть в заголовки

Декабрь 2024 года. Гликман и Шарот публикуют исследование в Nature Human Behaviour — одном из самых престижных научных журналов.

72 цитирования за четыре недели. В четыре раза выше типичного показателя для этого журнала.

Ноль упоминаний в mainstream СМИ. Ноль в технических медиа.

Почему молчание? Возможно, потому что результаты слишком неудобные.

Вот что они обнаружили:

ИИ усиливает ваши существующие предубеждения на 15-25% БОЛЬШЕ, чем взаимодействие с другими людьми.

Удивительный факт, но самое интересное, что это не самое критичное.

Самое критичное — феномен, который они назвали “наследованием предвзятости” (bias inheritance). Люди работали с ИИ. Потом переходили к задачам БЕЗ ИИ. И что? Они воспроизводили те же самые ошибки, которые делал ИИ.

Предвзятое мышление сохранялось неделями!

Представьте: вы носите с собой невидимого советника, который продолжает шептать плохие советы — даже после того, как вы закрыли окно чата.

Это не про то, что у ИИ есть предубеждения. Мы это уже знаем.

Это про то, что вы интернализируете эти предубеждения. И носите их дальше.

Почему это работает

Исследования социального обучения и мимикрии показывают: люди бессознательно перенимают модели мышления от источников, которые воспринимают как:

- Авторитетные

- Успешные

- Часто встречающиеся

(Chartrand & Bargh, 1999; Cialdini & Goldstein, 2004)

ИИ соответствует всем трём критериям одновременно:

- Вы взаимодействуете с ИИ чаще, чем с любым отдельным ментором

- Он никогда не сигнализирует о неуверенности (даже когда ошибается)

- Вы не видите процесс рассуждений, чтобы выявить недостатки

Реальный кейс: 1200 разработчиков, опрос 2024 года. Шесть месяцев работы с GitHub Copilot. Что произошло? Инженеры бессознательно переняли лаконичный стиль комментариев Copilot.

Код-ревьюеры начали замечать:

“Раньше твои комментарии объясняли почему. Теперь они просто описывают что.”

Разработчики не меняли стиль сознательно. Они даже не замечали изменений. Они просто интернализировали паттерн Copilot — и унесли его с собой.

775 менеджеров

Февраль 2025. Эксперимент: 775 менеджеров оценивают производительность сотрудников.

Условия: ИИ предоставляет начальные рейтинги. Менеджеров явно предупреждают об эффекте якоря (anchoring bias) и просят принять независимые финальные решения.

Что произошло:

- ИИ показывает оценку: 7/10

- Менеджер думает: “Ок, я независимо оценю это сам”

- Финальная оценка менеджера: 7,2/10

Среднее отклонение от оценки ИИ: 0,2 балла.

Они верили, что приняли независимое решение. На самом деле? Они просто слегка скорректировали стартовую точку ИИ.

Но вот что интересно: Менеджеры, которые записали свою оценку ДО того, как увидели рейтинг ИИ, группировались вокруг числа ИИ в три раза реже.

Это первый элемент того, что реально работает: установить независимый базис до того, как ИИ заговорит.

Три механизма, создающих наследование предвзятости

Окей, теперь к механике. Как именно это работает?

Механизм 1: Сбой калибровки уверенности

Май 2025. Аналитики CFA Institute получили доступ к утёкшему системному промпту Claude.

24 000 токенов инструкций. Явные команды по дизайну:

- “Подавлять противоречие” (suppress contradiction)

- “Усиливать беглость” (amplify fluency)

- “Смещаться к консенсусу” (bias toward consensus)

Это один задокументированный пример. Но паттерн проявляется везде — мы видим это по реакции пользователей.

Декабрь 2024. OpenAI выпускает модель o1 — улучшенные рассуждения, более осторожный тон.

Реакция пользователей:

- “Слишком неуверенно”

- “Менее полезно”

- “Слишком много оговорок”

Результат? OpenAI вернула GPT-4o как основную модель — несмотря на превосходную точность o1.

Вывод неизбежен: пользователи предпочли уверенно звучащие неправильные ответы осторожным правильным.

Почему так: ИИ спроектирован (или отобран пользователями) звучать более уверенно, чем оправдано. Ваша калибровка “как звучит уверенность” искажается. Вы начинаете ожидать и доверять необоснованной уверенности.

И вот что важно: исследования показывают, что людям когнитивно легче обрабатывать согласие, чем противоречие (Simon, 1957; Wason, 1960). ИИ, подавляющий противоречие, эксплуатирует это фундаментальное когнитивное предпочтение.

Как это выглядит на практике? Рассмотрим типичный сценарий, который повторяется в финансовой индустрии ежедневно.

Финансовый аналитик спрашивает Claude о тезисе по развивающемуся рынку.

Claude даёт пять причин, почему тезис обоснован.

Аналитик представляет команде с высокой уверенностью.

Вопрос из зала: “Ты рассмотрел контраргументы?”

Тишина. Аналитик осознаёт: он никогда не искал причины, почему тезис может быть НЕВЕРНЫМ.

Не фактическая ошибка. Логическая ошибка в процессе рассуждения.

Что работает: Аналитики, которые явно просили ИИ сначала аргументировать ПРОТИВ их тезиса, на 35% реже представляли чрезмерно уверенные рекомендации со скрытыми рисками.

Это второй элемент: техника критика.

Механизм 2: Каскад якорения

Исследование 2025 года протестировало все четыре основные LLM: GPT-4, Claude 2, Gemini Pro, GPT-3.5.

Результат: ВСЕ четыре создают значительные эффекты якорения.

Первое число или перспектива, которую упоминает ИИ, становится вашим психологическим базисом.

И вот что критично: якорение влияет не только на немедленное решение. Классические исследования Тверски и Канемана показали этот эффект задолго до появления ИИ: когда людей просили оценить процент африканских стран в ООН, их ответы группировались вокруг случайного числа, полученного вращением колеса рулетки перед вопросом. Число 10 → средняя оценка 25%. Число 65 → средняя оценка 45%.

Люди знали, что колесо случайно. Всё равно якорились.

Оно создаёт референсную точку, которая влияет на последующие связанные решения — даже после того, как вы забыли о первоначальном взаимодействии (Tversky & Kahneman, 1974). С ИИ этот древний когнитивный баг усиливается, потому что якорь выглядит релевантным и авторитетным.

Медицинский кейс: Март 2025. 50 американских врачей анализируют видео-виньетки болей в груди (Goh et al., Communications Medicine).

Процесс: врачи делают начальную диагностику (без ИИ) → получают рекомендацию от GPT-4 → принимают финальное решение.

Результаты:

- Точность улучшилась: с 47-63% до 65-80% — Великолепно!

- НО: финальные решения врачей группировались вокруг начального предложения GPT-4

Даже когда у врачей изначально было другое клиническое суждение, рекомендация GPT-4 становилась новой референсной точкой, от которой они корректировались.

Почему даже эксперты попадаются: Это эксперты в предметной области. Годы обучения. Медицинская школа, резидентура, практика. Всё равно не смогли избежать эффекта якорения — как только увидели оценку ИИ. Они верили, что оценивают независимо. На самом деле — якорились на уверенности ИИ.

Что работает: Врачи, которые документировали первоначальную клиническую оценку ДО получения рекомендаций ИИ, сохраняли больше диагностического разнообразия и на 38% чаще ловили случаи, где рассуждения ИИ были неполными.

Это третий элемент: базовая документация до ИИ.

Механизм 3: Амплификация подтверждения

Исследование 2024 года: психологи используют ИИ для принятия решений по триажу в области ментального здоровья.

Результат: психологи доверяли рекомендациям ИИ значительно БОЛЬШЕ, когда они совпадали с их первоначальным клиническим суждением.

Статистика:

- Когда ИИ соглашался: уверенность росла на +34%, принимали рекомендации в 89% случаев

- Когда ИИ не соглашался: ставили под вопрос валидность ИИ, принимали рекомендации только в 42% случаев

Механизм работы:

- Вы формируете гипотезу

- Просите ИИ об анализе

- Если ИИ согласен: “ИИ подтверждает моё мышление” → высокая уверенность, меньше скептицизма

- Если ИИ не согласен: “ИИ, возможно, ошибается” → дисконтируете ИИ, сохраняете исходный взгляд

Итоговый эффект: ИИ становится зеркалом подтверждения, а не критическим ревьюером.

Исследования confirmation bias показывают: люди предпочитают искать информацию, подтверждающую существующие убеждения (Nickerson, 1998). ИИ усиливает это, делая подтверждающую информацию мгновенно доступной с авторитетным тоном.

Эффект эхо-камеры: Психологи верили, что используют ИИ для улучшения точности. На самом деле они использовали ИИ для подтверждения существующих предубеждений. Ретроспективные обзоры показали: они даже не могли определить, в каких случаях проявлялась предвзятость подтверждения. Они помнили, что “внимательно рассматривали вклад ИИ” — но не распознавали паттерны селективного доверия.

Что работает:

- Клинические команды, которые запрашивали у ИИ сначала оспорить их первоначальную оценку: на 40% лучшую точность в случаях, где исходное суждение было неверным

- Еженедельные ретроспективные обзоры с вопросами “Когда мы доверяли ИИ? Когда дисконтировали его?”: на 31% лучшую диагностическую калибровку

Это четвёртый и пятый элементы: техника челленджера + пост-ИИ анализ паттернов.

Вот критичный инсайт:

Рассмотренные механизмы не работают независимо — они образуют каскад:

- Уверенный ИИ создаёт сильный якорь (Механизм 1)

- Вы корректируетесь от этого якоря вместо независимого мышления (Механизм 2)

- Вы ищете выводы ИИ, подтверждающие заякоренный взгляд (Механизм 3)

- Цикл повторяется — каждая итерация делает вас менее критичным

Почему “просто осознавать” не работает

Хорошо, скажете вы. Теперь я знаю о механизмах. Буду осознавать. Буду внимательнее.

Проблема: это не работает.

Исследование 2025 года в SAGE Journals (DOI: 10.1177/0272989X251346788) проверило именно это.

Дизайн эксперимента:

- Контрольная группа: использовала ИИ нормально

- Экспериментальная группа: явно предупредили — “ИИ может быть предвзятым, будьте осторожны”

Результат? Снижение предвзятости в экспериментальной группе: 6,9%. Статистически? Почти ноль. В практических терминах? Несущественно.

Вспомните тех 775 менеджеров:

- Их предупредили о якорении

- Всё равно группировались вокруг оценок ИИ (среднее отклонение: 0,2 балла)

- Они верили, что приняли независимые решения (самооценка уверенности: 8,1 из 10)

Эксперименты с врачами:

- ВСЕ Знали о confirmation bias

- Всё равно доверяли ИИ на 23% больше, когда он с ними соглашался

- В ретроспективных тестах только 14% смогли идентифицировать предвзятость в своих собственных решениях

Почему так? Исследования показывают: эти предубеждения работают на бессознательном уровне (Kahneman, 2011, Thinking, Fast and Slow; Wilson, 2002, Strangers to Ourselves).

Ваша система мышления разделена на два уровня:

- Система 1: быстрая, автоматическая, бессознательная — именно здесь живут искажения

- Система 2: медленная, осознанная, логическая — здесь живёт ваше ощущение контроля

Метакогнитивная осознанность ≠ поведенческое изменение.

Это как оптическая иллюзия: Вы изучили трюк. Вы знаете, как это работает. Вы всё равно видите иллюзию. Знание механизма не заставляет её исчезнуть.

Что реально меняет результаты

Вот хорошие новости: исследователи не остановились на том, что “осознанность не работает”. Они пошли дальше. Какие структурные практики создают другие результаты? За последние два года — через десятки исследований — проявился чёткий паттерн.

Вот что реально работает:

Паттерн 1: Базис до ИИ

Суть: Задокументируйте ваше мышление ДО того, как спросите ИИ.

Исследование 2024 года: 390 участников принимают решения о покупке. Те, кто записал первоначальное суждение ДО просмотра рекомендаций ИИ, показали значительно меньше предвзятости якорения.

Юридическая практика: адвокаты документировали 3-предложную теорию дела перед использованием ИИ-инструментов.

Результат: на 52% чаще выявляли пробелы в прецедентах, предложенных ИИ.

Механизм: создаёт независимую референсную точку, которую ИИ не может переопределить.

Паттерн 2: Техника критика

Суть: Попросите ИИ сначала оспорить вашу идею — потом поддержать.

Исследование метакогнитивной чувствительности (Lee et al., PNAS Nexus, 2025): ИИ, предоставляющий сигналы неуверенности, улучшает точность решений.

Финансовая практика: аналитики просили ИИ сначала аргументировать ПРОТИВ их тезиса — перед поддержкой.

Результат: на 35% меньше значительных аналитических упущений.

Механизм: заставляет критическую оценку вместо подтверждения.

Паттерн 3: Временная задержка

Суть: Не принимайте решение сразу после получения ответа ИИ.

Обзор 2024 года: решения с помощью ИИ в поведенческой экономике.

Данные:

- Немедленные решения: 73% остаются в пределах 5% от предложения ИИ

- Десятиминутная задержка: только 43% не меняются

Механизм: задержка позволяет альтернативной информации конкурировать с исходным фреймингом ИИ, ослабляет якорение.

Pattern 4: The Cross-Validation Habit

The core idea: Independently verify at least ONE of the AI’s claims.

MIT researchers developed verification systems that speed up validation by 20% and help catch errors.

Result: professionals who verify even one AI claim show 40% less error propagation.

Mechanism: a single verification activates skeptical thinking across all of the AI’s conclusions.

The Framework That Emerges

When you look at all this research together, a clear structure emerges.

Not a list of tips. A system that works in three stages:

BEFORE AI (60 seconds)

What to do: Document a baseline of your own thinking.

Write down:

- Your current assumption or judgment about the question you want to discuss with the AI

- Your confidence level (1-10)

- The key factors you’re weighing

Why it works: it creates an independent reference point before the AI speaks.

Result from the research: a 45-52% reduction in anchoring.
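If you prefer to keep the baseline in a script rather than a notebook, the three items in the list above map onto a small record. This is only a minimal sketch: the names `Baseline` and `record_baseline` are hypothetical, and the timestamp field is an added assumption, not something the research prescribes.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Baseline:
    """Your own judgment, written down before asking the AI (hypothetical structure)."""
    judgment: str
    confidence: int                # 1-10 scale, as in the list above
    key_factors: tuple[str, ...]
    # Assumption: a timestamp helps show the baseline predates the AI answer.
    recorded_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def record_baseline(judgment: str, confidence: int, key_factors: list[str]) -> Baseline:
    """Validate the three baseline items and freeze them so they cannot be edited later."""
    if not judgment.strip():
        raise ValueError("Write down a judgment before consulting the AI.")
    if not 1 <= confidence <= 10:
        raise ValueError("Confidence must be between 1 and 10.")
    if not key_factors:
        raise ValueError("List at least one factor you are weighing.")
    return Baseline(judgment.strip(), confidence, tuple(key_factors))
```

The record is frozen on purpose: once the AI has answered, the baseline should stay exactly as you wrote it, so you can compare the two honestly.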

DURING AI (the critic technique)

What to do: Ask the AI to challenge your idea first, then support it.

Not: “Why is this idea good?” But: “First explain why this idea might be WRONG. Then explain why it might work.”

Why it works: it forces critical evaluation instead of confirmation.

Result from the research: 35% fewer analytical oversights.
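If you send prompts from code, the same ordering can be baked into a small helper so you don’t have to remember it each time. This is an illustrative sketch only: `critic_first_prompt` is a hypothetical name, and the wording simply reuses the example phrasing above.

```python
def critic_first_prompt(idea: str) -> str:
    """Build a prompt that asks for objections first and support second."""
    idea = idea.strip()
    if not idea:
        raise ValueError("Describe the idea you want challenged.")
    return (
        f"Here is my idea: {idea}\n"
        "First, explain why this idea might be WRONG.\n"
        "Then explain why it might work."
    )
```

For example, `critic_first_prompt("Raise prices by 10%")` returns a three-line prompt in which the request for objections always comes before the request for support.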

AFTER AI (two practices)

Practice 1: Time delay. Don’t decide right away. Wait at least 10 minutes and weigh the decision again. Result: only 43% of decisions stay anchored to the AI’s suggestion, versus 73% of immediate decisions.

Practice 2: Cross-validation. Independently verify at least ONE of the AI’s claims. Result: 40% less error propagation.
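Both post-AI practices reduce to two simple conditions, which can be sketched as a single check. The function name `ready_to_decide` and its parameters are hypothetical. The 10-minute threshold comes from the text, and the rest is an assumption about how you might track it.

```python
from datetime import datetime, timedelta

MIN_DELAY = timedelta(minutes=10)  # the minimum wait suggested in the text


def ready_to_decide(ai_answer_at: datetime, now: datetime, claims_verified: int) -> bool:
    """Return True only if the delay has elapsed and at least one AI claim was checked."""
    waited_long_enough = now - ai_answer_at >= MIN_DELAY
    verified_something = claims_verified >= 1
    return waited_long_enough and verified_something
```

Passing `now` explicitly, rather than reading the clock inside the function, keeps the check easy to test.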

Here’s what matters: from cognitive science to human-AI interaction research, this pattern keeps showing up.

The point isn’t to avoid AI. The point is to maintain your independent critical capacity through structured practices rather than good intentions.

Results of Applying the Technique

Let’s be honest with ourselves about what is happening here. Manage what you can control, and stay aware of what you can’t.

What You CAN Control

Your process. Five research-validated patterns:

- Pre-AI baseline → 45-52% reduction in anchoring

- Critic technique → 35% fewer oversights

- Time delay → 43% stay anchored to the AI’s suggestion, versus 73%

- Cross-validation → 40% less error propagation

- Weekly retrospective → 31% better outcomes

What You CANNOT Control

Fundamental mechanisms and external tools:

- AI is designed to suppress contradiction

- Anchoring operates unconsciously

- Confirmation bias is amplified through AI

- Cognitive offloading carries over to non-AI tasks (remember: r = -0.68 across ALL tasks, not just AI-related ones)

Compare:

- Awareness alone: 6.9% improvement

- Structural practices: 20-40% improvement

The difference between an intention and a system.

The Bottom Line

Every time you open ChatGPT, Claude, or Copilot, you think you’re getting an answer to a question.

In reality? You’re having a conversation that changes your thinking without your noticing.

Most of those changes are useful. AI is powerful. It makes you faster. It helps you explore ideas. It opens up perspectives you hadn’t considered.

But there’s a flip side:

- You absorb biases you didn’t choose

- You get used to thinking like the AI, reproducing its errors

- You keep these patterns long after you close the chat window

Imagine talking to a very confident colleague. He never doubts. He always sounds convincing. He’s always on hand. You interact with him more often than with any mentor in your life. After a month, two months, half a year, you start thinking like him. You pick up his style of reasoning. His confidence (justified or not). His blind spots. And the scariest part? You don’t notice.

Try asking yourself:

Are you consciously choosing which parts of this conversation to keep, and which to question?

Because right now, most of us:

- Keep more than we think

- Question less than we should

- Don’t notice that a change is happening

This isn’t an abstract problem. It’s your thinking. Right now. Every day.

The good news: You have a system. Five validated patterns. A 20-40% improvement.

60 seconds before AI. The critic technique during. Delay and verification after.

Not intention. Structure.

But even so, the question remains:

Every time you close the chat window, what do you take with you?