When Titans Stumble: The $900 Million Data Mistake 🏦💥

Picture this: One of the world’s largest banks accidentally wires out $900 million. Not because of a cyber attack. Not because of fraud. But because their data systems were so confusing that even their own employees couldn’t navigate them properly.

This isn’t fiction. This happened to Citigroup in 2020. 😱

Here’s the thing about data today: everyone knows it’s valuable. CEOs call it “the new oil.” 🛢️ Boards approve massive budgets for analytics platforms. Companies hire armies of data scientists. The promise is irresistible—master your data, and you master your market.

But here’s what’s rarely discussed: the gap between knowing data is valuable and actually extracting that value is vast, treacherous, and littered with the wreckage of well-intentioned initiatives.

Citigroup should have been the last place for a data disaster. This is a financial titan operating in over 100 countries, managing trillions in assets, employing hundreds of thousands of people. If anyone understands that data is mission-critical—for risk management, regulatory compliance, customer insights—it’s a global bank. Their entire business model depends on the precise flow of information.

Yet over the past decade, Citi has paid over $1.5 billion in regulatory fines, largely due to how poorly they managed their data. The $400 million penalty in 2020 specifically cited “inadequate data quality management.” CEO Jane Fraser was blunt about the root cause: “an absence of enforced enterprise-wide standards and governance… a siloed organization… fragmented tech platforms and manual processes.”

The problems were surprisingly basic for such a sophisticated institution:

- 🔍 They lacked a unified way to catalog their data—imagine trying to find a specific document in a library with no card catalog system

- 👥 They had no effective Master Data Management, meaning the same customer might appear differently across various systems

- ⚠️ Their data quality tools were insufficient, allowing errors to multiply and spread

The $900 million wiring mistake? That was just the most visible symptom. Behind the scenes, opening a simple wealth management account took three times longer than industry standards because employees had to manually piece together customer information from multiple, disconnected systems. Cross-selling opportunities evaporated because customer data lived in isolated silos.

Since 2021, Citi has invested over $7 billion trying to fix these fundamental data problems—hiring a Chief Data Officer, implementing enterprise data governance, consolidating systems. They’re essentially rebuilding their data foundation while the business keeps running.

Citi’s story reveals an uncomfortable truth: recognizing data’s value is easy. Actually capturing that value? That’s where even titans stumble. The tools, processes, and thinking required to govern data effectively are fundamentally different from traditional IT management. And when organizations try to manage their most valuable asset with yesterday’s approaches, expensive mistakes become inevitable.

So why, in an age of unprecedented data abundance, does true data value remain so elusive? 🤔

The “New Oil” That Clogs the Engine ⛽🚫

The “data is the new oil” metaphor has become business gospel. And like oil, data holds immense potential energy—the power to fuel innovation, drive efficiency, and create competitive advantage. But here’s where the metaphor gets uncomfortable: crude oil straight from the ground is useless. It needs refinement, processing, and careful handling. Miss any of these steps, and your valuable resource becomes a liability.

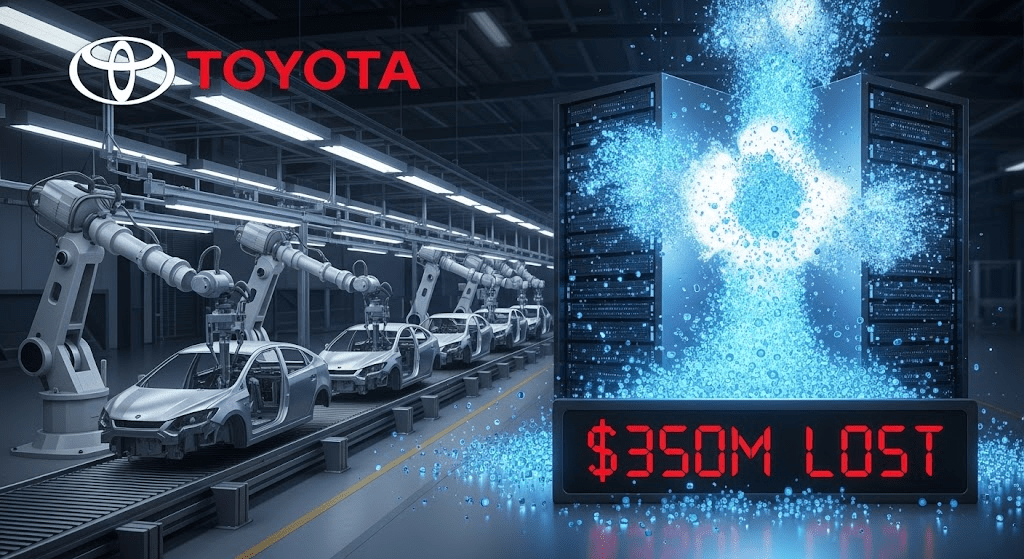

Toyota’s $350M Storage Overflow 🏭💾

Consider Toyota, the undisputed master of manufacturing efficiency. Their “just-in-time” production system is studied in business schools worldwide. If anyone knows how to manage resources precisely, it’s Toyota. Yet in August 2023, all 14 of their Japanese assembly plants—responsible for a third of their global output—ground to a complete halt.

Not because of a parts shortage or supply chain disruption, but because their servers ran out of storage space for parts ordering data. 🤯

Think about that for a moment. Toyota’s production lines, the engines of their enterprise, stopped not from a lack of physical components, but because their digital “storage tanks” for vital parts data overflowed. The valuable data was there, abundant even, but its unmanaged volume choked the system. What should have been a strategic asset became an operational bottleneck, costing an estimated $350 million in lost production for a single day.

The Excel Pandemic Response Disaster 📊🦠

Or picture this scene from the height of the COVID-19 pandemic: Public Health England, tasked with tracking virus spread to save lives, was using Microsoft Excel to process critical test results. Not a modern data platform, not a purpose-built system—Excel.

When positive cases exceeded the software’s row limit (a quaint 65,536 rows in the old format they were using), nearly 16,000 positive cases simply vanished into the digital ether. The “refinery” for life-saving data turned out to be a leaky spreadsheet, and thousands of vital records evaporated past an arbitrary digital limit.

These aren’t stories of companies that didn’t understand data’s value. Toyota revolutionized manufacturing through data-driven processes. Public Health England was desperately trying to harness data to fight a pandemic. Both organizations recognized the strategic importance of their information assets. But recognition isn’t realization.

The Sobering Statistics 📈📉

The numbers tell a sobering story:

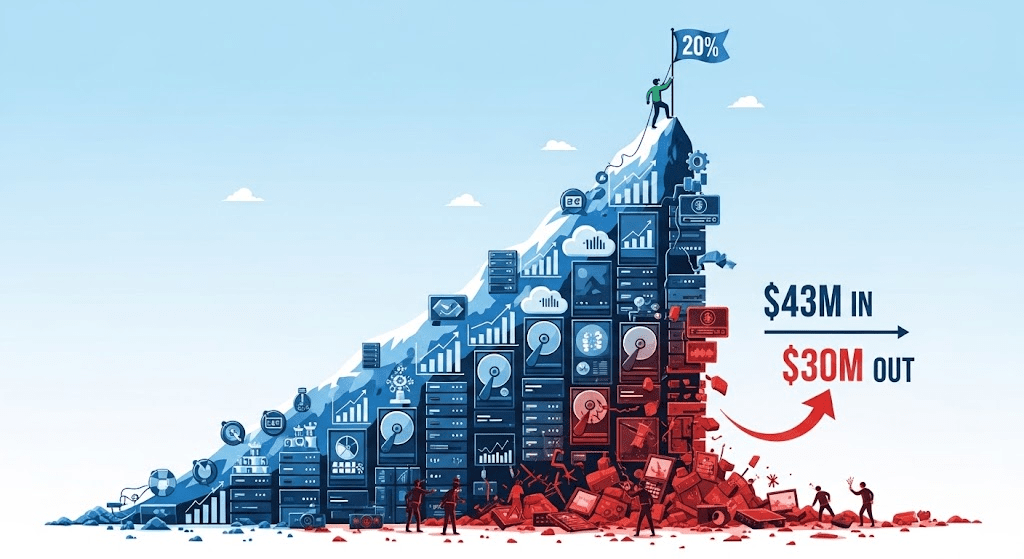

- Despite exponential growth in data volumes—projected to reach 175 zettabytes by 2025—only 20% of data and analytics solutions actually deliver business outcomes

- Organizations with low-impact data strategies see an average investment of 43millionyieldjust yield just $30 million in returns

- They’re literally losing money on their most valuable asset 💸

The problem isn’t the oil—it’s the refinement process. And that’s where most organizations, even the most sophisticated ones, are getting stuck.

The Symptoms: When Data Assets Become Data Liabilities 🚨

If you’ve worked in any data-driven organization, these scenarios will feel painfully familiar:

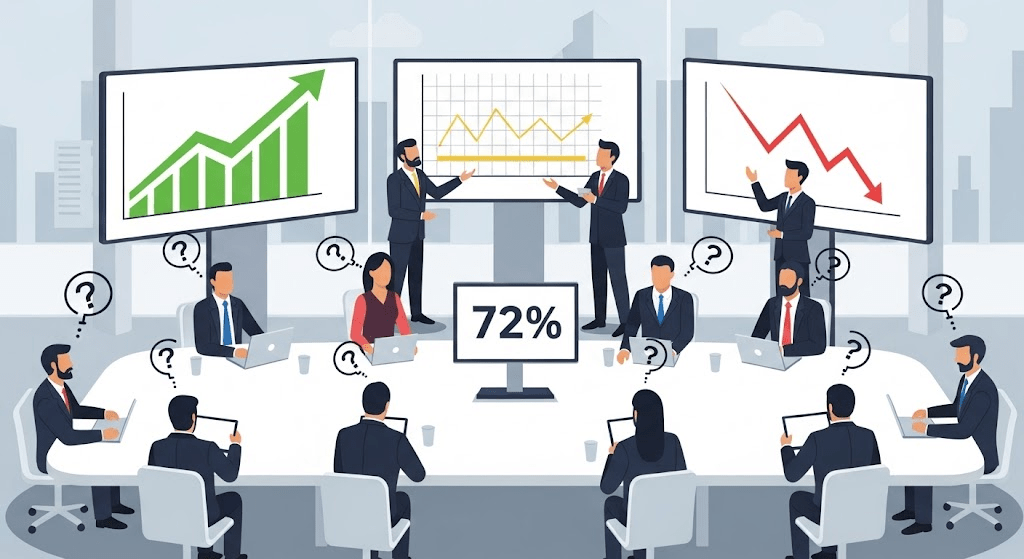

🗣️ The Monday Morning Meeting Meltdown

Marketing bursts in celebrating “record engagement” based on their dashboard. Sales counters with “stagnant conversions” from their system. Finance presents “flat growth” from yet another source. Three departments, three “truths,” one confused leadership team.

The potential for unified strategic insight drowns in a fog of conflicting data stories. According to recent surveys, 72% of executives cite this kind of cultural barrier—including lack of trust in data—as the primary obstacle to becoming truly data-driven.

🤖 The AI Project That Learned All the Wrong Lessons

Remember that multi-million dollar AI initiative designed to revolutionize customer understanding? The one that now recommends winter coats to customers in Miami and suggests dog food to cat owners? 🐕🐱

The “intelligent engine” sputters along, starved of clean, reliable data fuel. Unity Technologies learned this lesson the hard way when bad data from a large customer corrupted their machine learning algorithms, costing them $110 million in 2022. Their CEO called it “self-inflicted”—a candid admission that the problem wasn’t the technology, but the data feeding it.

📋 The Compliance Fire Drill

It’s audit season again. Instead of confidently demonstrating well-managed data assets, teams scramble to piece together data lineage that should be readily available. What should be a routine verification of good governance becomes a costly, reactive fire drill. The value of trust and transparency gets overshadowed by the fear of what auditors might find in the data chaos.

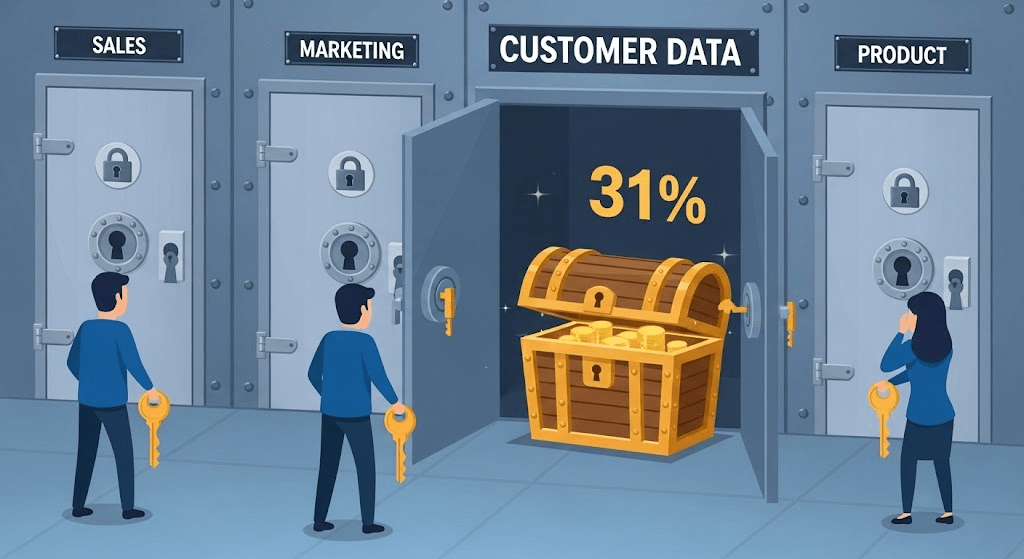

💎 The Goldmine That Nobody Can Access

Your organization sits on a treasure trove of customer data—purchase history, preferences, interactions, feedback. But it’s scattered across departmental silos like a jigsaw puzzle with pieces locked in different rooms.

- The sales team can’t see the full customer journey 🛤️

- Marketing can’t personalize effectively 🎯

- Product development misses crucial usage patterns 📱

Only 31% of companies have achieved widespread data accessibility, meaning the majority are sitting on untapped goldmines.

⏰ The Data Preparation Time Sink

Your highly skilled data scientists—the ones you recruited from top universities and pay premium salaries—spend 62% of their time not building sophisticated models or generating insights, but cleaning and preparing data.

It’s like hiring a master chef and having them spend most of their time washing dishes. 👨🍳🍽️ The opportunity cost is staggering: brilliant minds focused on data janitorial work instead of value creation.

The Bottom Line 📊

These aren’t isolated incidents. They’re symptoms of a systemic problem: organizations that recognize data’s strategic value but lack the specialized approaches needed to extract it. The result? Data becomes a source of frustration rather than competitive advantage, a cost center rather than a profit driver.

The most telling statistic? Despite all the investment in data initiatives, over 60% of executives don’t believe their companies are truly data-driven. They’re drowning in information but starving for insight. 🌊📊

Why Yesterday’s Playbook Fails Tomorrow’s Data 📚❌

Here’s where many organizations go wrong: they try to manage their most valuable and complex asset using the same approaches that work for everything else. It’s like trying to conduct a symphony orchestra with a traffic warden’s whistle—the potential for harmony exists, but the tools are fundamentally mismatched. 🎼🚦

Traditional IT governance excels at managing predictable, structured systems. Deploy software, follow change management protocols, monitor performance, patch as needed. These approaches work brilliantly for email servers, accounting systems, and corporate websites.

But data is different. It’s dynamic, interconnected, and has a lifecycle that spans creation, transformation, analysis, archival, and deletion. It flows across systems, changes meaning in different contexts, and its quality can degrade in ways that aren’t immediately visible.

The Knight Capital Catastrophe ⚔️💥

Consider Knight Capital, a sophisticated financial firm that dominated high-frequency trading. They had cutting-edge technology and rigorous software development practices. Yet in 2012, a routine software deployment—the kind they’d done countless times—triggered a catastrophic failure.

Their trading algorithms went haywire, executing millions of erroneous trades in 45 minutes and losing $460 million. The company was essentially destroyed overnight.

What went wrong? Their standard software deployment process failed to account for data-specific risks:

- 🔄 Old code that handled trading data differently was accidentally reactivated

- 🧪 Their testing procedures, designed for typical software changes, missed the unique ways this change would interact with live market data

- ⚡ Their risk management systems, built for normal trading scenarios, couldn’t react fast enough to data-driven chaos

Knight Capital’s story illustrates a crucial point: even world-class general IT practices can be dangerously inadequate when applied to data-intensive systems. The company had excellent software engineers, robust development processes, and sophisticated technology. What they lacked were data-specific safeguards—the specialized approaches needed to manage systems where data errors can cascade into business catastrophe within minutes.

The Pattern Repeats 🔄

This pattern repeats across industries. Equifax, a company whose entire business model depends on data accuracy, suffered coding errors in 2022 that generated incorrect credit scores for hundreds of thousands of consumers. Their general IT change management processes failed to catch problems that were specifically related to how data flowed through their scoring algorithms.

Data’s Unique Challenges 🎯

The fundamental issue is that data has unique characteristics that generic approaches simply can’t address:

- 📊 Volume and Velocity: Data systems must handle massive scale and real-time processing that traditional IT rarely encounters

- 🔀 Variety and Complexity: Data comes in countless formats and structures, requiring specialized integration approaches

- ✅ Quality and Lineage: Unlike other IT assets, data quality can degrade silently, and understanding where data comes from becomes critical for trust

- ⚖️ Regulatory and Privacy Requirements: Data governance involves compliance challenges that don’t exist for typical IT systems

Trying to govern today’s dynamic data ecosystems with yesterday’s generic project plans is like navigating a modern metropolis with a medieval map—you’re bound to get lost, and the consequences can be expensive. 🗺️🏙️

The solution isn’t to abandon proven IT practices, but to extend them with data-specific expertise. Organizations need approaches that understand data’s unique nature and can govern it as the strategic asset it truly is.

The Specialized Data Lens: From Deluge to Dividend 🔍💰

So how do organizations bridge this gap between data’s promise and its realization? The answer lies in what we call the “specialized data lens”—a fundamentally different way of thinking about and managing data that recognizes its unique characteristics and requirements.

This isn’t about abandoning everything you know about IT and business management. It’s about extending those proven practices with data-specific approaches that can finally unlock the value sitting dormant in your organization’s information assets.

The Two-Pronged Approach 🔱

The specialized data lens operates on two complementary levels:

🛠️ Data-Specific Tools and Architectures for Value Extraction

Just as you wouldn’t use a screwdriver to perform surgery, you can’t manage modern data ecosystems with generic tools. Organizations need purpose-built solutions:

- Data catalogs that make information discoverable and trustworthy

- Master data management systems that create single sources of truth

- Data quality frameworks that prevent the “garbage in, garbage out” problem

- Modern architectural patterns like data lakehouses and data fabrics that can handle today’s volume, variety, and velocity requirements

→ In our next post, we’ll dive deep into these specialized tools and show you exactly how they work in practice.

📋 Data-Centric Processes and Governance for Value Realization

Even the best tools are useless without the right processes. This means:

- Data stewardship programs that assign clear ownership and accountability

- Quality frameworks that catch problems before they cascade

- Proven methodologies like DMBOK (Data Management Body of Knowledge) that provide structured approaches to data governance

- Embedding data thinking into every business process, not treating it as an IT afterthought

→ Our third post will explore these governance frameworks and show you how to implement them effectively.

What’s Coming Next 🚀

In this series, we’ll explore:

- 🔧 The Specialized Toolkit – Deep dive into data-specific tools and architectures that actually work

- 👥 Mastering Data Governance – Practical frameworks for implementing effective data governance without bureaucracy

- 📈 Measuring Success – How to prove ROI and build sustainable data programs

- 🎯 Industry Applications – Real-world case studies across different sectors

The Choice Is Yours ⚡

Here’s the truth: the data paradox isn’t inevitable. Organizations that adopt specialized approaches to data management don’t just survive the complexity—they thrive because of it. They turn their data assets into competitive advantages, their information into insights, and their digital exhaust into strategic fuel.

The question isn’t whether your organization will eventually need to master data governance. The question is whether you’ll do it proactively, learning from others’ expensive mistakes, or reactively, after your own $900 million moment.

What’s your data story? Share your experiences with data challenges in the comments below—we’d love to hear what resonates most with your organization’s journey. 💬

Ready to transform your data from liability to asset? Subscribe to our newsletter for practical insights on data governance, and don’t miss our upcoming posts on specialized tools and governance frameworks that actually work. 📧✨

Next up: “Data’s Demands: The Specialized Toolkit and Architectures You Need” – where we’ll show you exactly which tools can solve the problems we’ve outlined today.